The Hao AI Lab analysis crew on the College of California San Diego — on the forefront of pioneering AI mannequin innovation — just lately acquired an NVIDIA DGX B200 system to raise their crucial work in giant language mannequin inference.

Many LLM inference platforms in manufacturing right now, equivalent to NVIDIA Dynamo, use analysis ideas that originated within the Hao AI Lab, together with DistServe.

How Is Hao AI Lab Utilizing the DGX B200?

With the DGX B200 now absolutely accessible to the Hao AI Lab and broader UC San Diego group on the College of Computing, Data and Knowledge Sciences’ San Diego Supercomputer Heart, the analysis alternatives are boundless.

“DGX B200 is likely one of the strongest AI programs from NVIDIA so far, which signifies that its efficiency is among the many finest on the planet,” mentioned Hao Zhang, assistant professor within the Halıcıoğlu Knowledge Science Institute and division of pc science and engineering at UC San Diego. “It allows us to prototype and experiment a lot sooner than utilizing previous-generation {hardware}.”

Two Hao AI Lab tasks the DGX B200 is accelerating are FastVideo and the Lmgame benchmark.

FastVideo focuses on coaching a household of video era fashions to provide a five-second video based mostly on a given textual content immediate — in simply 5 seconds.

The analysis part of FastVideo faucets into NVIDIA H200 GPUs along with the DGX B200 system.

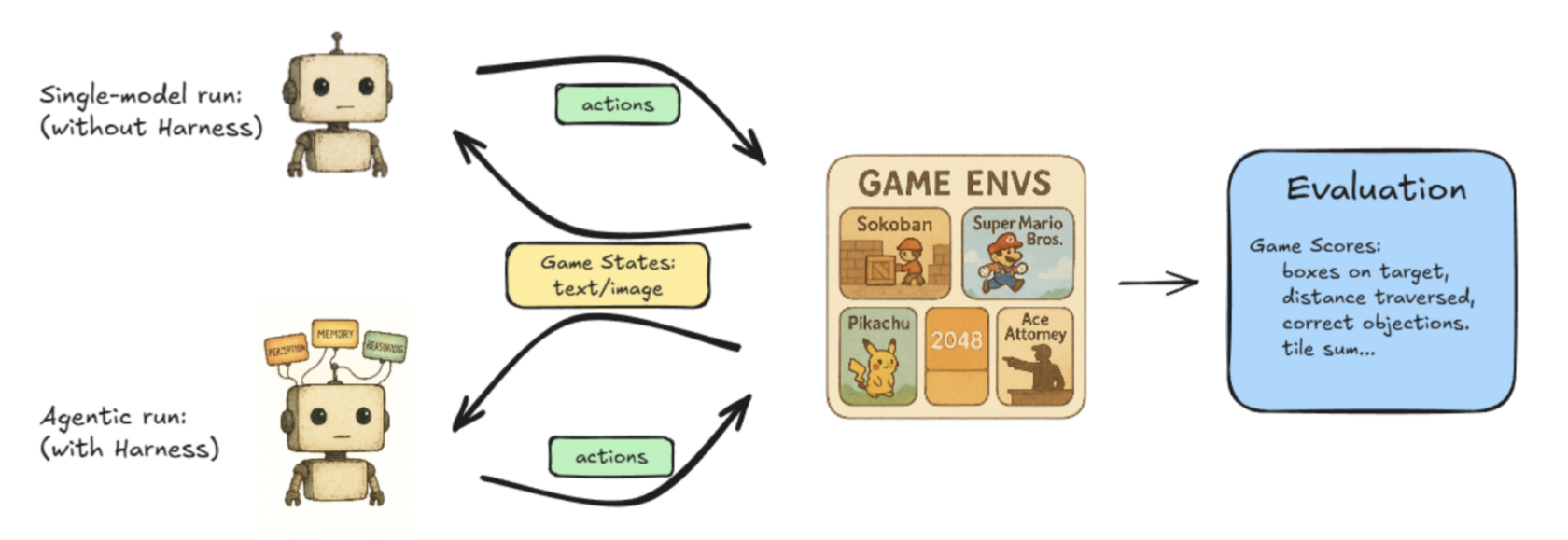

Lmgame-bench is a benchmarking suite that places LLMs to the take a look at utilizing widespread on-line video games together with Tetris and Tremendous Mario Bros. Customers can take a look at one mannequin at a time or put two fashions up towards one another to measure their efficiency.

Different ongoing tasks at Hao AI Labs discover new methods to realize low-latency LLM serving, pushing giant language fashions towards real-time responsiveness.

“Our present analysis makes use of the DGX B200 to discover the following frontier of low-latency LLM-serving on the superior {hardware} specs the system offers us,” mentioned Junda Chen, a doctoral candidate in pc science at UC San Diego.

How DistServe Influenced Disaggregated Serving

Disaggregated inference is a means to make sure large-scale LLM-serving engines can obtain the optimum combination system throughput whereas sustaining acceptably low latency for person requests.

The advantage of disaggregated inference lies in optimizing what DistServe calls “goodput” as an alternative of “throughput” within the LLM-serving engine.

Right here’s the distinction:

Throughput is measured by the variety of tokens per second that your complete system can generate. Increased throughput means decrease value to generate every token to serve the person. For a very long time, throughput was the one metric utilized by LLM-serving engines to measure their efficiency towards each other.

Whereas throughput measures the mixture efficiency of the system, it doesn’t straight correlate to the latency {that a} person perceives. If a person calls for decrease latency to generate the tokens, the system has to sacrifice throughput.

This pure trade-off between throughput and latency is what led the DistServe crew to suggest a brand new metric, “goodput”: the measure of throughput whereas satisfying the user-specified latency targets, normally referred to as service-level targets. In different phrases, goodput represents the general well being of a system whereas satisfying person expertise.

DistServe reveals that goodput is a significantly better metric for LLM-serving programs, because it components in each value and repair high quality. Goodput results in optimum effectivity and supreme output from a mannequin.

How Can Builders Obtain Optimum Goodput?

When a person makes a request in an LLM system, the system takes the person enter and generates the primary token, referred to as prefill. Then, the system creates quite a few output tokens, one after one other, predicting every token’s future habits based mostly on previous requests’ outcomes. This course of is named decode.

Prefill and decode have traditionally run on the identical GPU, however the researchers behind DistServe discovered that splitting them onto totally different GPUs maximizes goodput.

“Beforehand, for those who put these two jobs on a GPU, they’d compete with one another for sources, which may make it sluggish from a person perspective,” Chen mentioned. “Now, if I break up the roles onto two totally different units of GPUs — one doing prefill, which is compute intensive, and the opposite doing decode, which is extra reminiscence intensive — we will essentially remove the interference between the 2 jobs, making each jobs run sooner.

This course of known as prefill/decode disaggregation, or separating the prefill from decode to get higher goodput.

Growing goodput and utilizing the disaggregated inference technique allows the continual scaling of workloads with out compromising on low-latency or high-quality mannequin responses.

NVIDIA Dynamo — an open-source framework designed to speed up and scale generative AI fashions on the highest effectivity ranges with the bottom value — allows scaling disaggregated inference.

Along with these tasks, cross-departmental collaborations, equivalent to in healthcare and biology, are underway at UC San Diego to additional optimize an array of analysis tasks utilizing the NVIDIA DGX B200, as researchers proceed exploring how AI platforms can speed up innovation.

Study extra in regards to the NVIDIA DGX B200 system.