Today, OpenAI launched GPT-5.2, touting its stronger safety performance with regard to mental health.

“With this release, we continued our work to strengthen our models’ responses in sensitive conversations, with meaningful improvements in how they respond to prompts indicating signs of suicide or self-harm, mental health distress, or emotional reliance on the model,” OpenAI’s blog post states.

OpenAI has recently been hit with criticism and lawsuits, which accuse ChatGPT of contributing to some users’ psychosis, paranoia, and delusions. Some of these users died by suicide after lengthy conversations with the AI chatbot, which has had a well-documented problem with sycophancy.

In response to a wrongful death lawsuit concerning the suicide of 16-year-old Adam Raine, OpenAI denied that the LLM was responsible, claimed ChatGPT directed the teenager to seek help for his suicidal thoughts, and stated that the teenager “misused” the platform. At the same time, OpenAI pledged to improve how ChatGPT responds when users display warning signs of self-harm and mental health crises. As many users develop emotional attachments to AI chatbots like ChatGPT, AI companies are facing growing scrutiny for the safeguards they have in place to protect users.

Now, OpenAI claims that its latest ChatGPT models will offer “fewer undesirable responses” in sensitive situations.

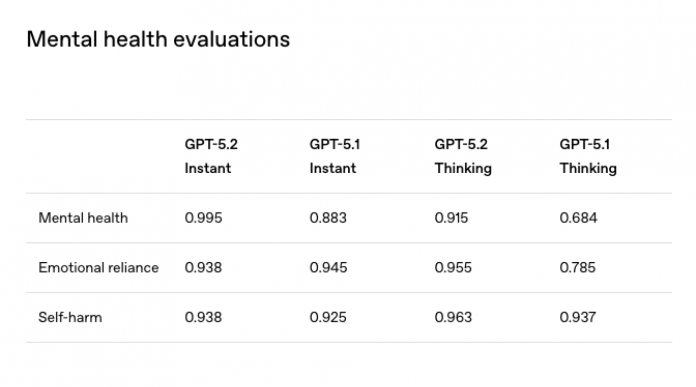

In the blog post announcing GPT-5.2, OpenAI states that GPT-5.2 scores higher on safety tests related to mental health, emotional reliance, and self-harm compared to GPT-5.1 models. Previously, OpenAI has said it’s using “safe completion,” a new safety-training approach that balances helpfulness and safety. More information on the new models’ performance can be found in the 5.2 system card.

Credit: Screenshot: OpenAI

However, the company has also observed that GPT-5.2 refuses fewer requests for mature content, specifically sexualized text. But this apparently doesn’t affect users OpenAI knows to be underage, as the company states that its age safeguards “appear to be working well.” OpenAI applies additional content protections for minors, including reducing access to content containing violence, gore, viral challenges, roleplay of a sexual, romantic, or violent nature, and “extreme beauty standards.”

An age prediction model is also in the works, which will allow ChatGPT to estimate its users’ ages to help provide more age-appropriate content for younger users.

Earlier this fall, OpenAI launched parental controls in ChatGPT, including monitoring and restricting certain types of use.

OpenAI isn’t the only AI company accused of exacerbating mental health issues. Last year, a mother sued Character.AI after her son’s death by suicide, and another lawsuit claims children were severely harmed by that platform’s “characters.” Character.AI has been declared unsafe for teens by online safety experts. Likewise, AI chatbots from a variety of platforms, including OpenAI, have been declared unsafe for teens’ mental health, according to child safety and mental health experts.

If you’re feeling suicidal or experiencing a mental health crisis, please talk to somebody. You can call or text the 988 Suicide & Crisis Lifeline at 988, or chat at 988lifeline.org. You can reach the Trans Lifeline by calling 877-565-8860 or the Trevor Project at 866-488-7386. Text “START” to Crisis Text Line at 741-741. Contact the NAMI HelpLine at 1-800-950-NAMI, Monday through Friday from 10:00 a.m. – 10:00 p.m. ET, or email [email protected]. If you don’t like the phone, consider using the 988 Suicide and Crisis Lifeline Chat. Here is a list of international resources.

Disclosure: Ziff Davis, Mashable’s parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.