NVIDIA founder and CEO Jensen Huang took the stage at the Fontainebleau Las Vegas today to open CES 2026, declaring that AI is scaling into every domain and every device.

“Computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence,” Huang said. “What that means is some $10 trillion or so of the last decade of computing is now being modernized to this new way of doing computing.”

Huang unveiled Rubin, NVIDIA’s first extreme-codesigned, six-chip AI platform, now in full production, and introduced Alpamayo, an open reasoning model family for autonomous vehicle development. Both are part of a sweeping push to bring AI into every domain.

With Rubin, NVIDIA aims to “push AI to the next frontier” while slashing the cost of generating tokens to roughly one-tenth that of the previous platform, Huang said, making large-scale AI far more economical to deploy.

Huang also emphasized the role of NVIDIA open models across every domain. Trained on NVIDIA supercomputers, these models form a global ecosystem of intelligence that developers and enterprises can build on.

“Every single six months, a new model is emerging, and these models are getting smarter and smarter,” Huang said. “Because of that, you can see the number of downloads has exploded.”

Find all NVIDIA news from CES in the online press kit.

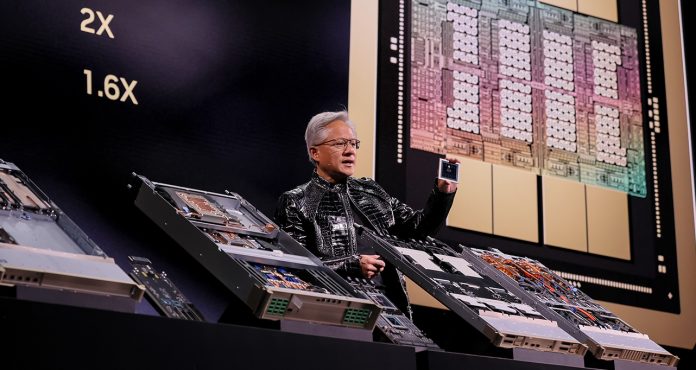

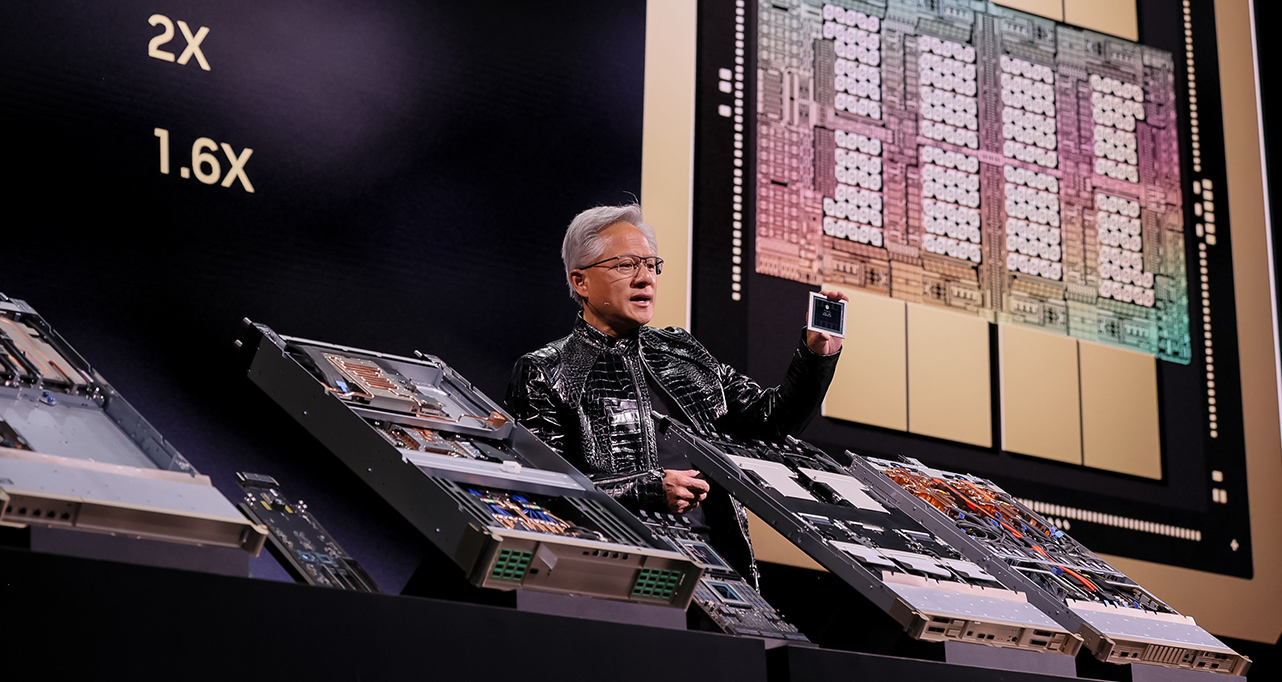

A New Engine for Intelligence: The Rubin Platform

Introducing the audience to pioneering American astronomer Vera Rubin, after whom NVIDIA named its next-generation computing platform, Huang announced that the NVIDIA Rubin platform is now in full production. Rubin is the successor to the record‑breaking NVIDIA Blackwell architecture and the company’s first extreme-codesigned, six‑chip AI platform.

Built from the data center outward, the Rubin platform’s components span:

- Rubin GPUs with 50 petaflops of NVFP4 inference

- Vera CPUs engineered for data movement and agentic processing

- NVLink 6 scale‑up networking

- Spectrum‑X Ethernet Photonics scale‑out networking

- ConnectX‑9 SuperNICs

- BlueField‑4 DPUs

Extreme codesign, meaning designing all of these components together, is essential, Huang explained, because scaling AI to gigascale requires tightly integrated innovation across chips, trays, racks, networking, storage and software to eliminate bottlenecks and dramatically reduce the costs of training and inference.

He also introduced AI-native storage in the form of the NVIDIA Inference Context Memory Storage Platform, an AI‑native KV‑cache tier that boosts long‑context inference with 5x higher tokens per second, 5x better performance per TCO dollar and 5x better power efficiency.

Put it all together and the Rubin platform promises to dramatically accelerate AI innovation, delivering AI tokens at one-tenth the cost. “The faster you train AI models, the faster you can get the next frontier out to the world,” Huang said. “This is your time to market. This is technology leadership.”

Open Models for All

NVIDIA’s open models, trained on NVIDIA’s own supercomputers, are powering breakthroughs across healthcare, climate science, robotics, embodied intelligence and autonomous driving.

“Now on top of this platform, NVIDIA is a frontier AI model builder, and we build it in a very special way,” Huang said. “We build it completely in the open so that we can enable every company, every industry, every country, to be part of this AI revolution.”

The portfolio spans six domains, creating a foundation for innovation across industries: Clara for healthcare, Earth-2 for climate science, Nemotron for reasoning and multimodal AI, Cosmos for robotics and simulation, GR00T for embodied intelligence and Alpamayo for autonomous driving.

“These models are open to the world,” Huang said, underscoring NVIDIA’s role as a frontier AI builder with world-class models topping leaderboards. “You can create the model, evaluate it, guardrail it and deploy it.”

AI on Every Desk: RTX, DGX Spark and Personal Agents

Huang emphasized that AI’s future is not only about supercomputers; it’s personal.

Huang showed a demo featuring a personalized AI agent running locally on the NVIDIA DGX Spark desktop supercomputer and embodied through a Reachy Mini robot using Hugging Face models. The demo illustrated how open models, model routing and local execution turn agents into responsive, physical collaborators.

“The amazing thing is that this is utterly trivial now, and yet, just a couple of years ago, it would have been impossible, absolutely unimaginable,” Huang said.

The world’s leading enterprises are integrating NVIDIA AI to power their products, Huang said, citing companies including Palantir, ServiceNow, Snowflake, CodeRabbit, CrowdStrike, NetApp and Semantec.

“Whether it’s Palantir or ServiceNow or Snowflake, and many other companies that we’re working with, the agentic system is the interface,” he said.

At CES, NVIDIA also announced that DGX Spark delivers up to 2.6x performance for large models, along with new support for Lightricks LTX‑2 and FLUX image models and upcoming NVIDIA AI Enterprise availability.

Physical AI

Through NVIDIA’s technologies for training, inference and edge computing, AI is now grounded in the physical world.

These systems can be trained on synthetic data in virtual worlds long before they interact with the real world.

Huang showcased NVIDIA Cosmos open world foundation models, trained on videos, robotics data and simulation. Cosmos:

- Generates realistic videos from a single image

- Synthesizes multi‑camera driving scenarios

- Models edge‑case environments from scenario prompts

- Performs physical reasoning and trajectory prediction

- Drives interactive, closed‑loop simulation

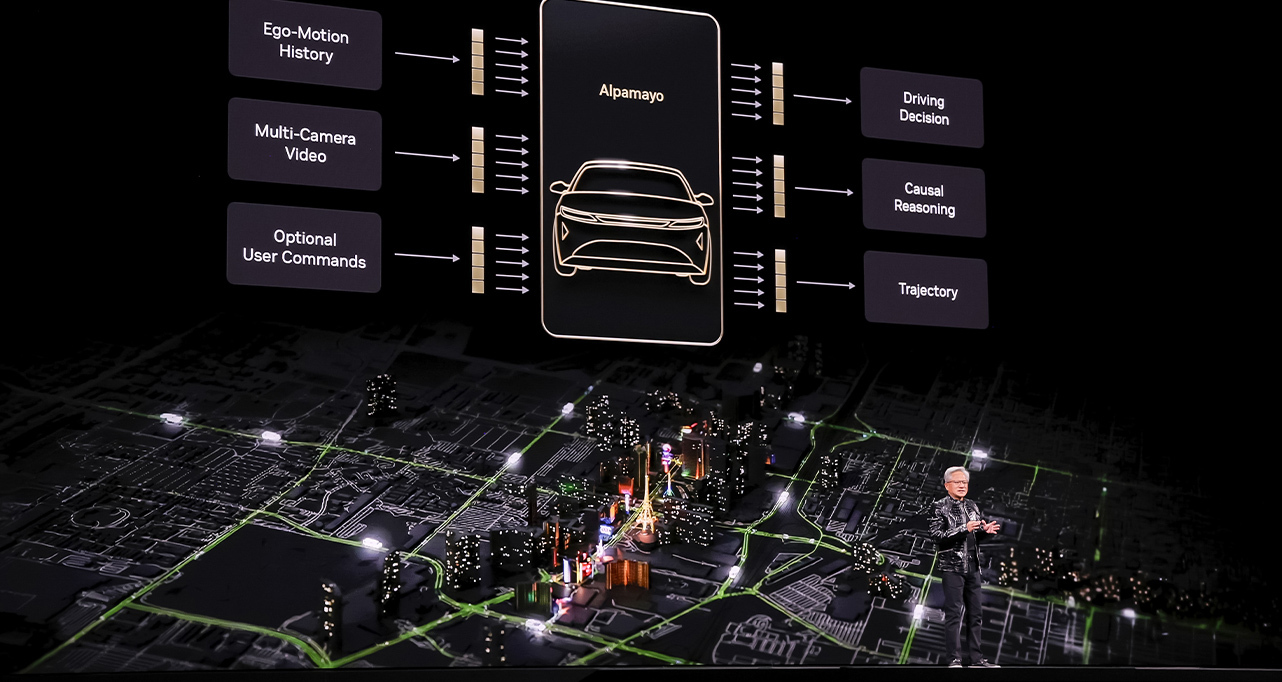

Advancing this story, Huang announced Alpamayo, an open portfolio of reasoning vision-language-action (VLA) models, simulation blueprints and datasets enabling level 4‑capable autonomy. This includes:

- Alpamayo R1, the first open reasoning VLA model for autonomous driving

- AlpaSim, a fully open simulation blueprint for high‑fidelity AV testing

“Not only does it take sensor input and activate the steering wheel, brakes and acceleration, it also reasons about what action it’s about to take,” Huang said, teeing up a video showing a car smoothly navigating busy San Francisco traffic.

Huang announced that the first passenger car featuring Alpamayo, built on the NVIDIA DRIVE full-stack autonomous vehicle platform, will soon be on the roads in the all‑new Mercedes‑Benz CLA, with AI‑defined driving coming to the U.S. this year. The news follows the CLA’s recent Euro NCAP 5‑star safety rating.

Huang also highlighted growing momentum behind DRIVE Hyperion, the open, modular, level‑4‑ready platform adopted by leading automakers, suppliers and robotaxi providers worldwide.

“Our vision is that, someday, every single car, every single truck will be autonomous, and we’re working toward that future,” Huang said.

Huang was then joined on stage by a pair of tiny beeping, booping, hopping robots as he explained how NVIDIA’s full‑stack approach is fueling a global physical AI ecosystem.

Huang rolled a video showing how robots are trained in NVIDIA Isaac Sim and Isaac Lab in photorealistic, simulated worlds, then highlighted the work of physical AI partners across the industry, including Synopsys and Cadence, Boston Dynamics and Franka, and more.

He also announced an expanded partnership with Siemens, supported by a montage showing how NVIDIA’s full stack integrates with Siemens’ industrial software, enabling physical AI from design and simulation through production.

“These manufacturing plants are going to be essentially giant robots,” Huang said.

Building the Future, Together

Huang explained that NVIDIA now builds entire systems because it takes a full, optimized stack to deliver AI breakthroughs.

“Our job is to create the entire stack so that all of you can create incredible applications for the rest of the world,” he said.
