NVIDIA Blackwell’s scale-up capabilities set the stage to scale out the world’s largest AI factories.

The NVIDIA Blackwell architecture is the reigning leader of the AI revolution.

Many think of Blackwell as a chip, but it may be better to think of it as a platform powering large-scale AI infrastructure.

Surging Demand and Model Complexity

Blackwell is the core of an entire system architecture designed specifically to power AI factories that produce intelligence using the largest and most complex AI models.

Today’s frontier AI models have hundreds of billions of parameters and are estimated to serve nearly a billion users per week. The next generation of models is expected to have well over a trillion parameters, and those models are being trained on tens of trillions of tokens of data drawn from text, image and video datasets.

Scaling out a data center (harnessing up to thousands of computers to share the work) is essential to meet this demand. But far greater performance and energy efficiency can come from first scaling up: by building a bigger computer.

Blackwell redefines the limits of just how big we can go.
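A quick calculation shows why a single GPU cannot hold the trillion-parameter models described above. The sketch below is back-of-the-envelope arithmetic, not an NVIDIA sizing tool: it counts model weights only (no KV cache, activations or framework overhead), the helper names `weights_footprint_gb` and `min_gpus_for_weights` are hypothetical, and the 192 GB per-GPU memory capacity is an illustrative assumption to be replaced with the figure for your hardware.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weights_footprint_gb(num_params: float, precision: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(num_params: float, precision: str, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights.

    Ignores KV cache, activations and framework overhead, so real
    deployments need more GPUs than this lower bound.
    """
    return math.ceil(weights_footprint_gb(num_params, precision) / gpu_memory_gb)


if __name__ == "__main__":
    ONE_TRILLION = 1e12
    ASSUMED_GPU_MEMORY_GB = 192.0  # assumption for illustration only
    for precision in ("bf16", "fp8", "fp4"):
        gb = weights_footprint_gb(ONE_TRILLION, precision)
        gpus = min_gpus_for_weights(ONE_TRILLION, precision, ASSUMED_GPU_MEMORY_GB)
        print(f"{precision}: {gb:,.0f} GB of weights -> at least {gpus} GPUs")
```

Under these assumptions, a one-trillion-parameter model needs about 2,000 GB for its BF16 weights, at least 11 GPUs' worth of memory before a single request is served. Even at FP4 it spans several GPUs, so serving it means those GPUs must constantly exchange data, which is where a larger scale-up domain pays off.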

Exponential growth of parameters in notable AI models over time.

Data Source: Epoch (2025), with major processing by Our World in Data

Today’s Most Challenging Form of Computing

AI factories are the machines of the next industrial revolution. Their work is AI inference, the most challenging form of computing known today, and their product is intelligence.

These factories require infrastructure that can adapt, scale out and make the most of every bit of available compute.

What does that look like?

A symphony of compute, networking, storage, power and cooling, integrated at the silicon and systems levels and up and down racks, all orchestrated by software that sees tens of thousands of Blackwell GPUs as one.

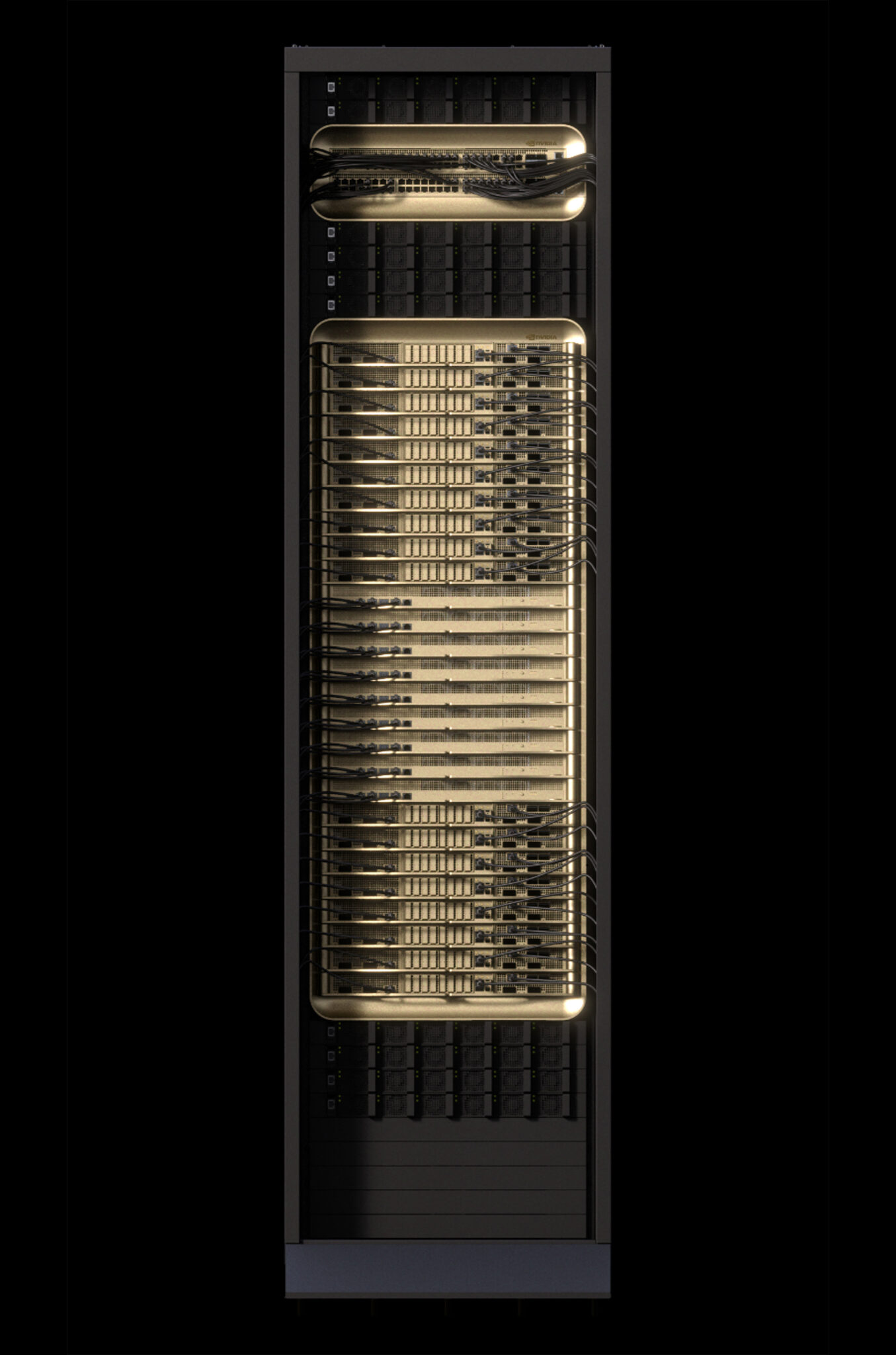

The new unit of the data center is NVIDIA GB200 NVL72, a rack-scale system that acts as a single, massive GPU.

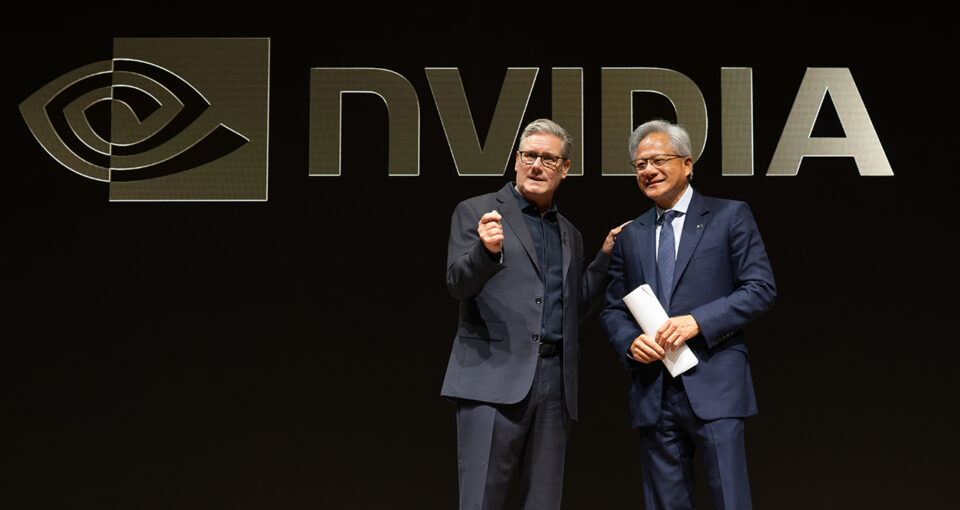

NVIDIA CEO Jensen Huang shows off the NVIDIA GB200 NVL72 system and the NVIDIA Grace Blackwell superchip during his keynote at CES 2025.

Birth of a Superchip

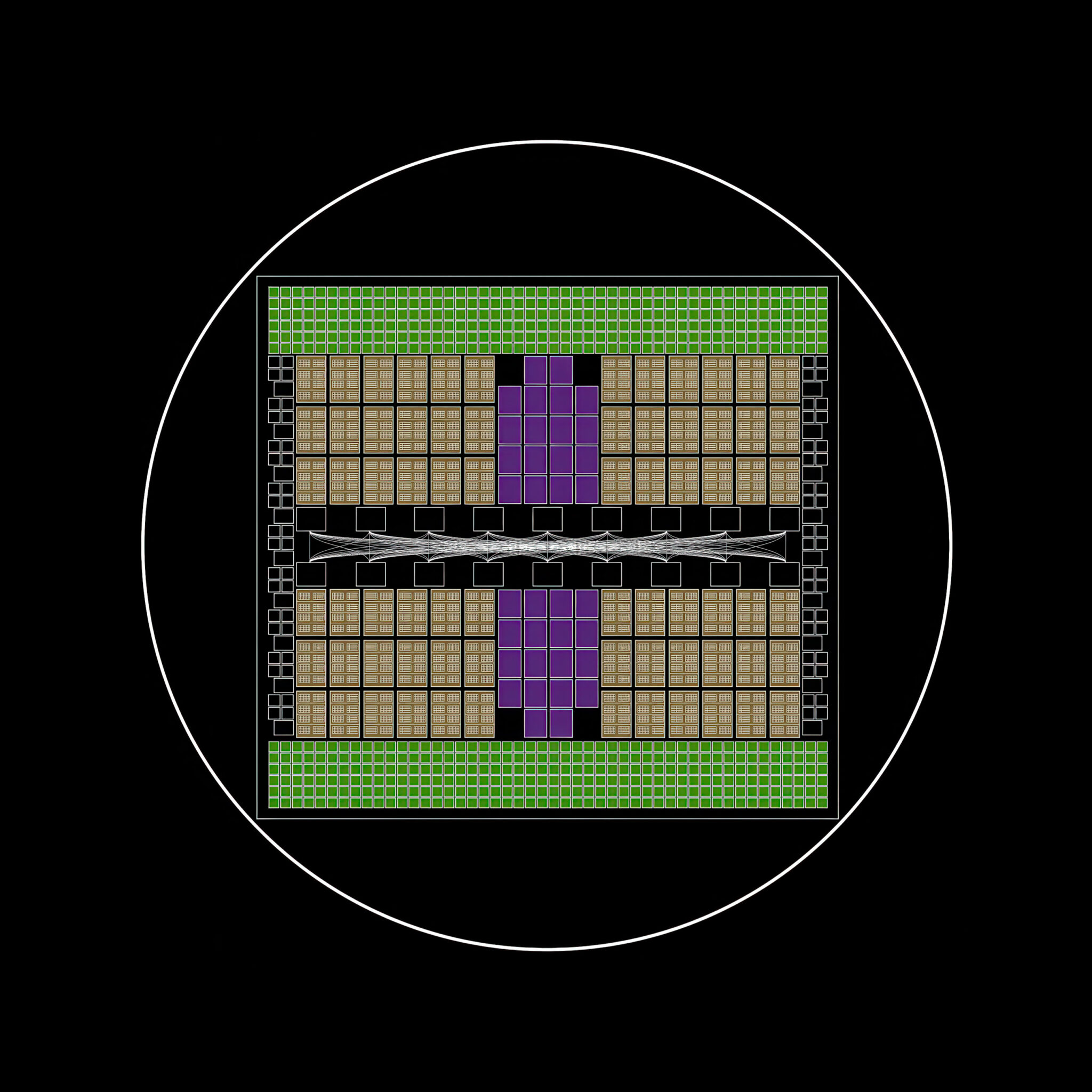

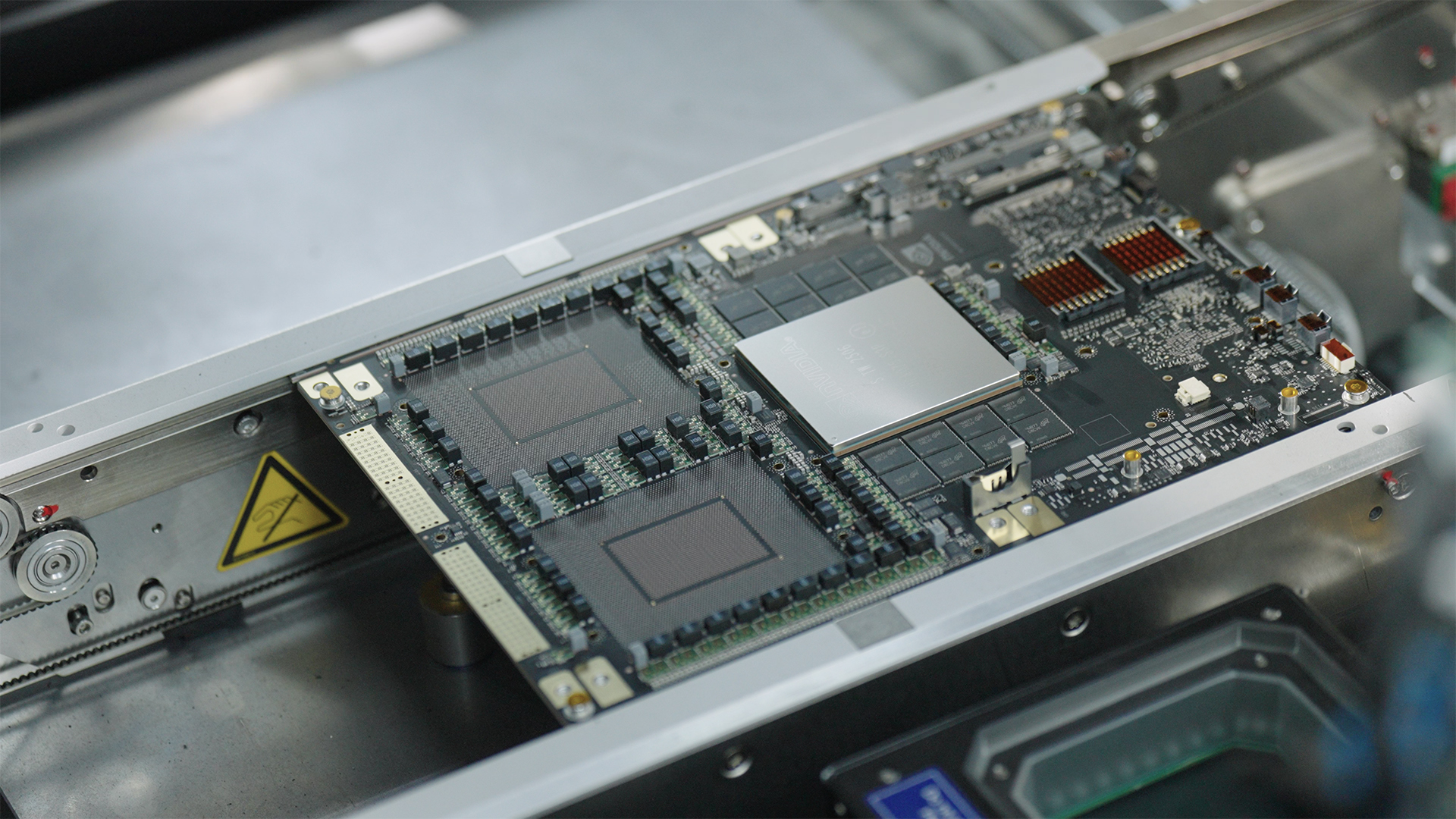

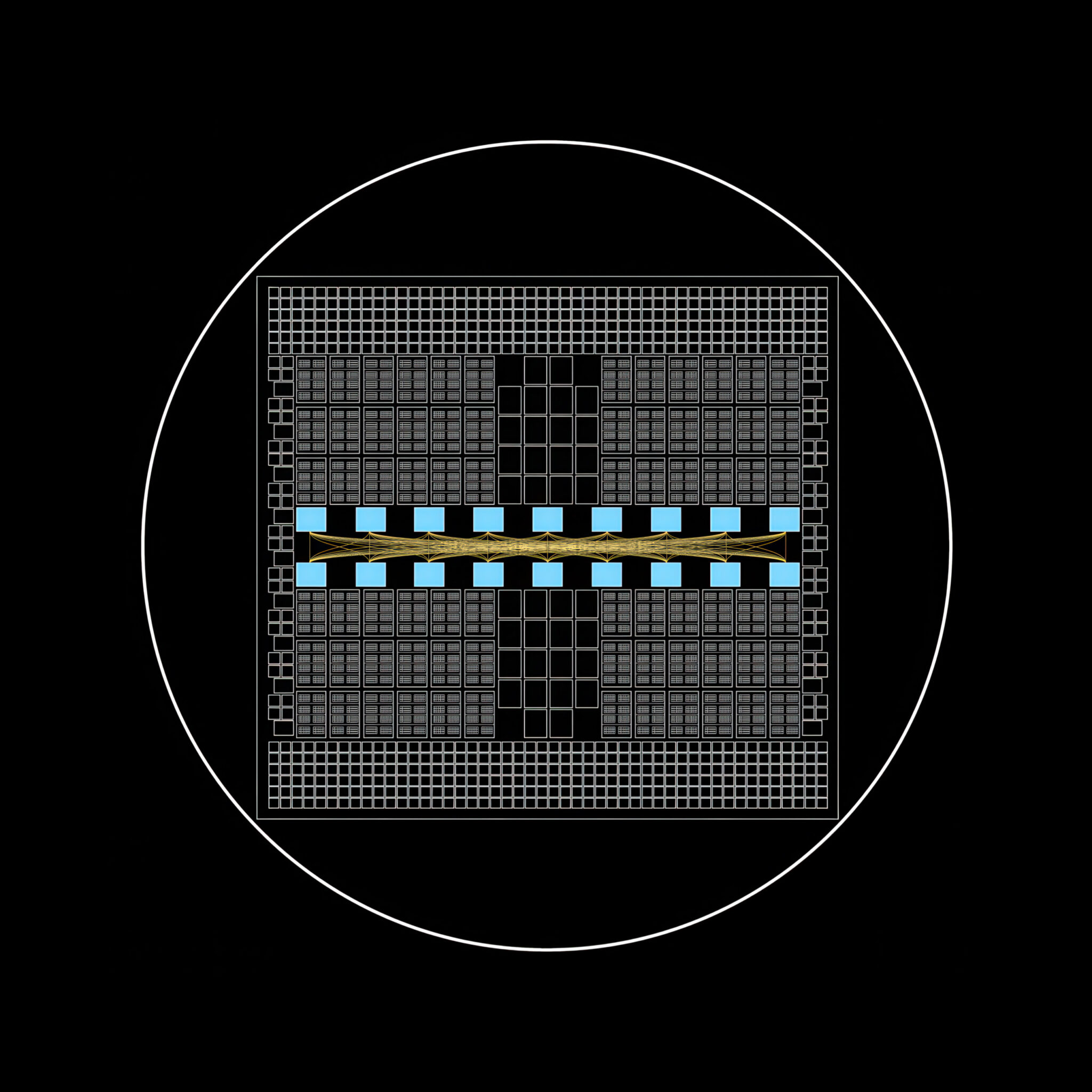

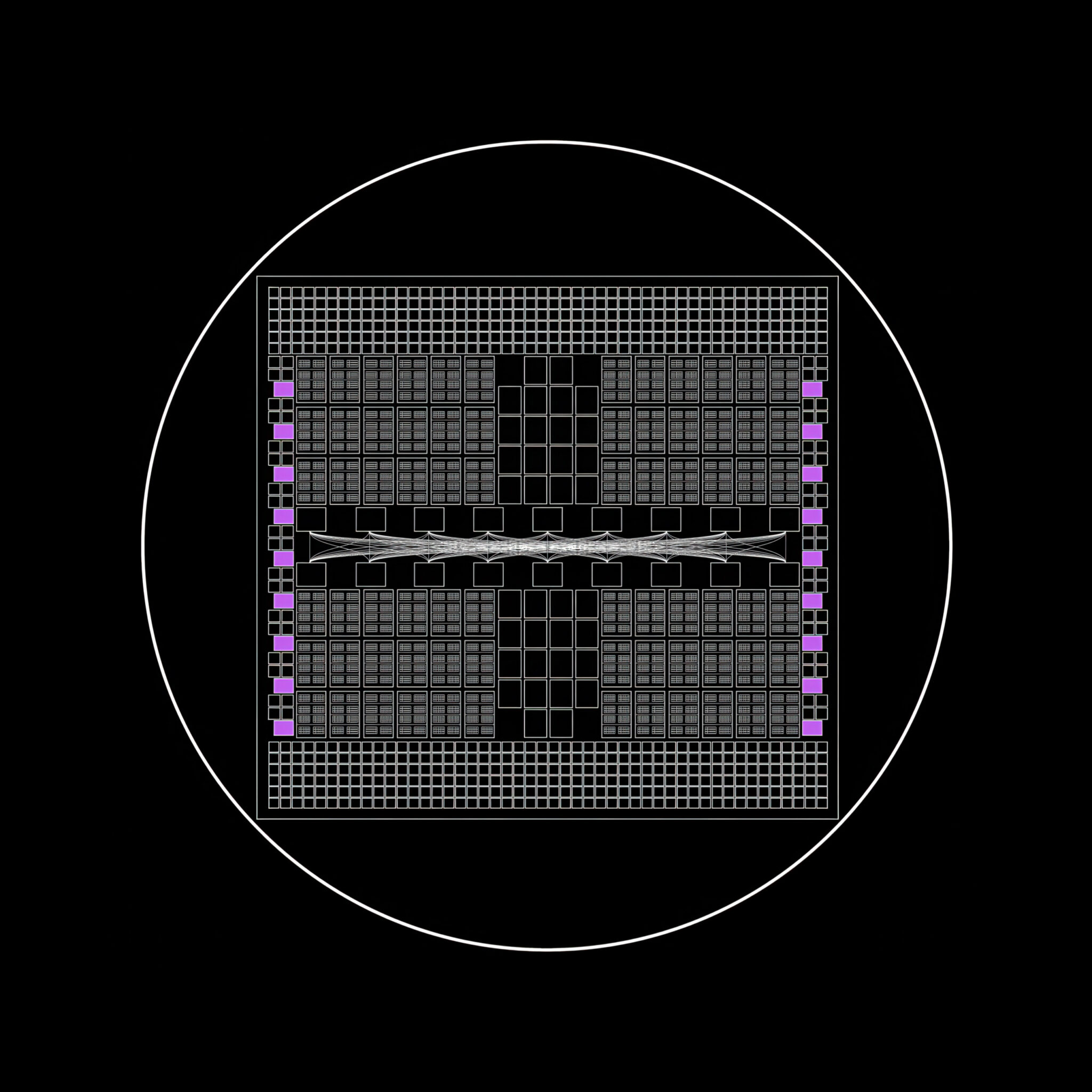

At the core, the NVIDIA Grace Blackwell superchip unites two Blackwell GPUs with one NVIDIA Grace CPU.

Fusing them into a unified compute module, a superchip, boosts performance by an order of magnitude. Doing so requires a high-speed interconnect technology first introduced with the NVIDIA Hopper architecture: NVIDIA NVLink chip-to-chip.

This technology enables seamless communication between the CPU and GPUs, letting them share memory directly, which results in lower latency and higher throughput for AI workloads.

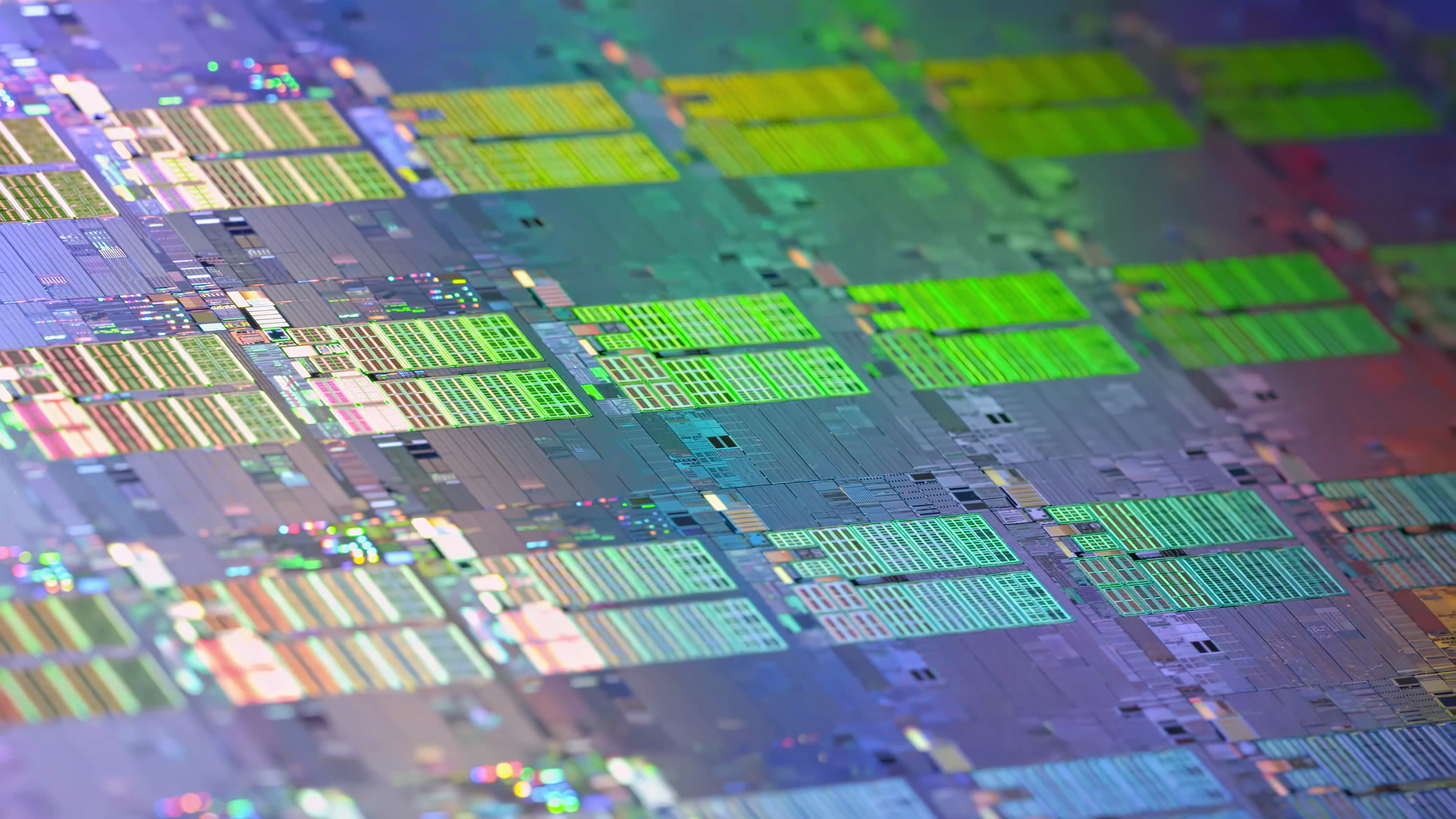

It takes a symphony of creation, cutting, assembly and inspection to build a superchip.

A New Interconnect for the Superchip Era

Scaling this performance across multiple superchips without bottlenecks was impossible with previous networking technology. So NVIDIA created a new kind of interconnect to keep performance bottlenecks from emerging and to enable AI at scale.

A Backbone That Clears Bottlenecks

The NVIDIA NVLink Switch spine anchors GB200 NVL72 with a precisely engineered web of over 5,000 high-performance copper cables, connecting 72 GPUs across 18 compute trays to move data at a staggering 130 TB/s.

That’s fast enough to transfer the entire internet’s peak traffic in less than a second.
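The rack's composition follows from figures stated in this article: two Blackwell GPUs and one Grace CPU per superchip, 72 GPUs across 18 compute trays, and 130 TB/s of NVLink bandwidth. The sketch below derives the rest, assuming GPUs are spread evenly across trays and bandwidth evenly across GPUs; `RackSpec` is a hypothetical illustration, not an NVIDIA API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackSpec:
    """Hypothetical summary of a GB200 NVL72 rack built from the article's figures."""
    gpus: int = 72
    compute_trays: int = 18
    gpus_per_superchip: int = 2
    cpus_per_superchip: int = 1
    nvlink_total_tb_s: float = 130.0

    @property
    def superchips(self) -> int:
        return self.gpus // self.gpus_per_superchip

    @property
    def cpus(self) -> int:
        return self.superchips * self.cpus_per_superchip

    @property
    def gpus_per_tray(self) -> int:
        # Assumes GPUs are spread evenly across compute trays.
        return self.gpus // self.compute_trays

    @property
    def superchips_per_tray(self) -> int:
        return self.superchips // self.compute_trays

    @property
    def nvlink_per_gpu_tb_s(self) -> float:
        # Assumes the aggregate NVLink bandwidth is shared evenly by all GPUs.
        return self.nvlink_total_tb_s / self.gpus


if __name__ == "__main__":
    rack = RackSpec()
    print(f"{rack.superchips} superchips, {rack.cpus} Grace CPUs")
    print(f"{rack.superchips_per_tray} superchips ({rack.gpus_per_tray} GPUs) per tray")
    print(f"~{rack.nvlink_per_gpu_tb_s:.2f} TB/s of NVLink bandwidth per GPU")
```

This yields 36 superchips (36 Grace CPUs), two superchips or four GPUs per tray, and roughly 1.8 TB/s of NVLink bandwidth per GPU. The per-GPU figure is an even share of the quoted aggregate, not a measured throughput.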

Two miles of copper wire are precisely cut, measured, assembled and tested to create the blisteringly fast NVIDIA NVLink Switch spine.

The spine cartridge is inspected before installation.

The spine, powered up, can move the entire internet’s peak traffic in less than a second.

Building One Giant GPU for Inference

The integration of all this advanced hardware and software, compute and networking enables GB200 NVL72 systems to unlock new possibilities for AI at scale.

Each rack weighs one and a half tons and brings together more than 600,000 parts, two miles of wire and millions of lines of code.

It acts as one giant virtual GPU, making factory-scale AI inference possible where every nanosecond and watt matters.

GB200 NVL72 Everywhere

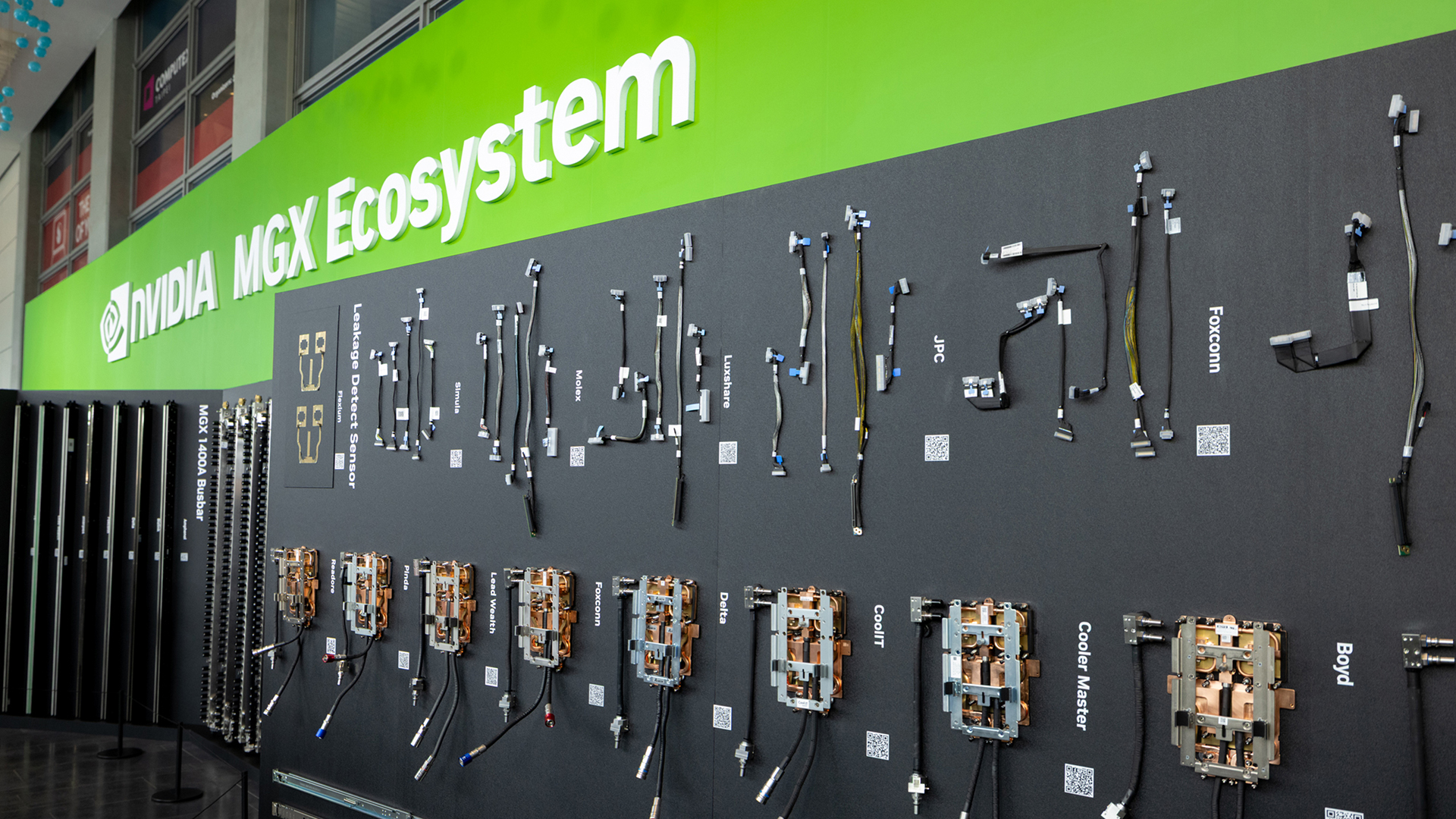

NVIDIA then deconstructed GB200 NVL72 so that partners and customers can configure and build their own NVL72 systems.

Each NVL72 system is a two-ton, 1.2-million-part supercomputer. NVL72 systems are manufactured across more than 150 factories worldwide with 200 technology partners.

From cloud giants to system builders, partners worldwide are producing NVIDIA Blackwell NVL72 systems.

Time to Scale Out

Tens of thousands of Blackwell NVL72 systems converge to create AI factories.

Working together isn’t enough. They must work as one.

NVIDIA Spectrum-X Ethernet and Quantum-X800 InfiniBand switches make this unified effort possible at the data center level.

Each GPU in an NVL72 system is connected directly to the factory’s data network and to every other GPU in the system. GB200 NVL72 systems offer 400 Gbps of Ethernet or InfiniBand interconnect using NVIDIA ConnectX-7 NICs.
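Comparing the two fabrics shows why the article favors scaling up before scaling out. The sketch below uses this article's figures plus three assumptions: the 400 Gbps link is per GPU, NVLink's 130 TB/s aggregate is shared evenly by the rack's 72 GPUs, and protocol overhead and latency are ignored, so the transfer times are idealized. `transfer_seconds` is a hypothetical helper.

```python
NVLINK_RACK_TB_S = 130.0  # aggregate NVLink Switch bandwidth per NVL72 rack (from the article)
GPUS_PER_RACK = 72
SCALE_OUT_GBPS = 400.0    # Ethernet/InfiniBand link; treating it as per-GPU is an assumption

# Convert both to gigabytes per second (decimal units: 1 TB = 1000 GB, 1 byte = 8 bits).
nvlink_per_gpu_gb_s = NVLINK_RACK_TB_S * 1000 / GPUS_PER_RACK
scale_out_per_gpu_gb_s = SCALE_OUT_GBPS / 8


def transfer_seconds(size_gb: float, bandwidth_gb_s: float) -> float:
    """Idealized time to move size_gb at a sustained bandwidth, ignoring latency and overhead."""
    return size_gb / bandwidth_gb_s


if __name__ == "__main__":
    ratio = nvlink_per_gpu_gb_s / scale_out_per_gpu_gb_s
    print(f"NVLink per GPU:     {nvlink_per_gpu_gb_s:,.0f} GB/s")
    print(f"Scale-out per GPU:  {scale_out_per_gpu_gb_s:,.0f} GB/s")
    print(f"Scale-up advantage: ~{ratio:.0f}x")
    for label, bw in (("NVLink", nvlink_per_gpu_gb_s), ("scale-out", scale_out_per_gpu_gb_s)):
        print(f"Moving 10 GB over {label}: {transfer_seconds(10, bw) * 1000:.1f} ms")
```

By this idealized measure, each GPU reaches its rack neighbors over NVLink at roughly 36 times the bandwidth of its scale-out link (about 1,806 GB/s versus 50 GB/s), so moving 10 GB takes about 5.5 ms inside the rack versus 200 ms over the scale-out network.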

NVIDIA Quantum-X800 Switch, NVLink Switch and Spectrum-X Ethernet unify one or many NVL72 systems to operate as one.

Opening Lines of Communication

Scaling out AI factories requires many tools, each in service of one thing: unrestricted, parallel communication for every AI workload in the factory.

NVIDIA BlueField-3 DPUs do their part to boost AI performance by offloading and accelerating the non-AI tasks that keep the factory running: the symphony of networking, storage and security.

NVIDIA GB200 NVL72 powers an AI factory by CoreWeave, an NVIDIA Cloud Partner.

The AI Factory Operating System

The data center is now the computer. NVIDIA Dynamo is its operating system.

Dynamo orchestrates and coordinates AI inference requests across a large fleet of GPUs so that AI factories run at the lowest possible cost, maximizing productivity and revenue.

It can add, remove and shift GPUs across workloads in response to surges in customer use, and route queries to the GPUs best suited for the job.
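The toy sketch below illustrates the two behaviors just described: routing each request to a suitable GPU, and shifting GPUs between workloads when demand surges. It is not Dynamo's API or algorithm. The `Pool` class, the least-loaded routing rule and the average-load surge threshold are all hypothetical simplifications chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pool:
    """Hypothetical group of GPUs serving one workload (for example, one model)."""
    name: str
    gpu_load: dict[str, int] = field(default_factory=dict)  # GPU id -> in-flight requests

    def route(self) -> str:
        """Send one request to the least-loaded GPU in this pool and return its id."""
        if not self.gpu_load:
            raise RuntimeError(f"pool {self.name!r} has no GPUs")
        gpu = min(self.gpu_load, key=self.gpu_load.get)
        self.gpu_load[gpu] += 1
        return gpu

    def avg_load(self) -> float:
        """Mean in-flight requests per GPU (0.0 for an empty pool)."""
        if not self.gpu_load:
            return 0.0
        return sum(self.gpu_load.values()) / len(self.gpu_load)


def rebalance(pools: list[Pool], surge_threshold: float) -> tuple[str, str, str] | None:
    """Move one idle GPU from the least-busy pool to the busiest pool when the
    busiest pool's average load exceeds surge_threshold.

    Returns (gpu_id, from_pool, to_pool), or None if nothing was moved.
    """
    busiest = max(pools, key=Pool.avg_load)
    calmest = min(pools, key=Pool.avg_load)
    if busiest is calmest or busiest.avg_load() <= surge_threshold:
        return None
    idle = [gpu for gpu, load in calmest.gpu_load.items() if load == 0]
    # Only move idle GPUs, and always leave at least one GPU in every pool.
    if not idle or len(calmest.gpu_load) == 1:
        return None
    gpu = idle[0]
    del calmest.gpu_load[gpu]
    busiest.gpu_load[gpu] = 0
    return gpu, calmest.name, busiest.name


if __name__ == "__main__":
    chat = Pool("chat", {"gpu0": 0, "gpu1": 0})
    code = Pool("code", {"gpu2": 0, "gpu3": 0})
    for _ in range(6):  # a surge of chat traffic
        chat.route()
    print(chat.gpu_load)
    print(rebalance([chat, code], surge_threshold=2.0))
    print(chat.route())  # the newly added, idle GPU takes the next request
```

Here six chat requests leave `gpu0` and `gpu1` with three each, the rebalancer moves the idle `gpu2` from the code pool to the chat pool, and the next chat request lands on it. A production orchestrator would weigh many more signals than queue depth; this sketch only conveys the shape of the idea.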

Colossus, xAI’s AI supercomputer. Built in 122 days, it houses over 200,000 NVIDIA GPUs, an example of a full-stack, scale-out architecture.

Blackwell is more than a chip. It’s the engine of AI factories.

The world’s largest planned computing clusters are being built on the Blackwell and Blackwell Ultra architectures, with approximately 1,000 racks of NVIDIA GB300 systems produced each week.
