Interest in generative AI continues to grow as new models add more capabilities. With the latest advancements, even enthusiasts without a developer background can dive right into using these models.

With popular applications like Langflow, a low-code, visual platform for designing custom AI workflows, AI enthusiasts can use simple, no-code user interfaces (UIs) to chain generative AI models. And with native integration for Ollama, users can now create local AI workflows and run them without usage fees and with complete privacy, powered by NVIDIA GeForce RTX and RTX PRO GPUs.

Visual Workflows for Generative AI

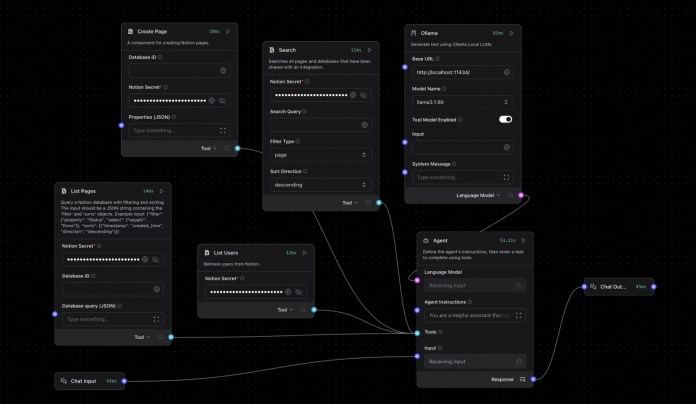

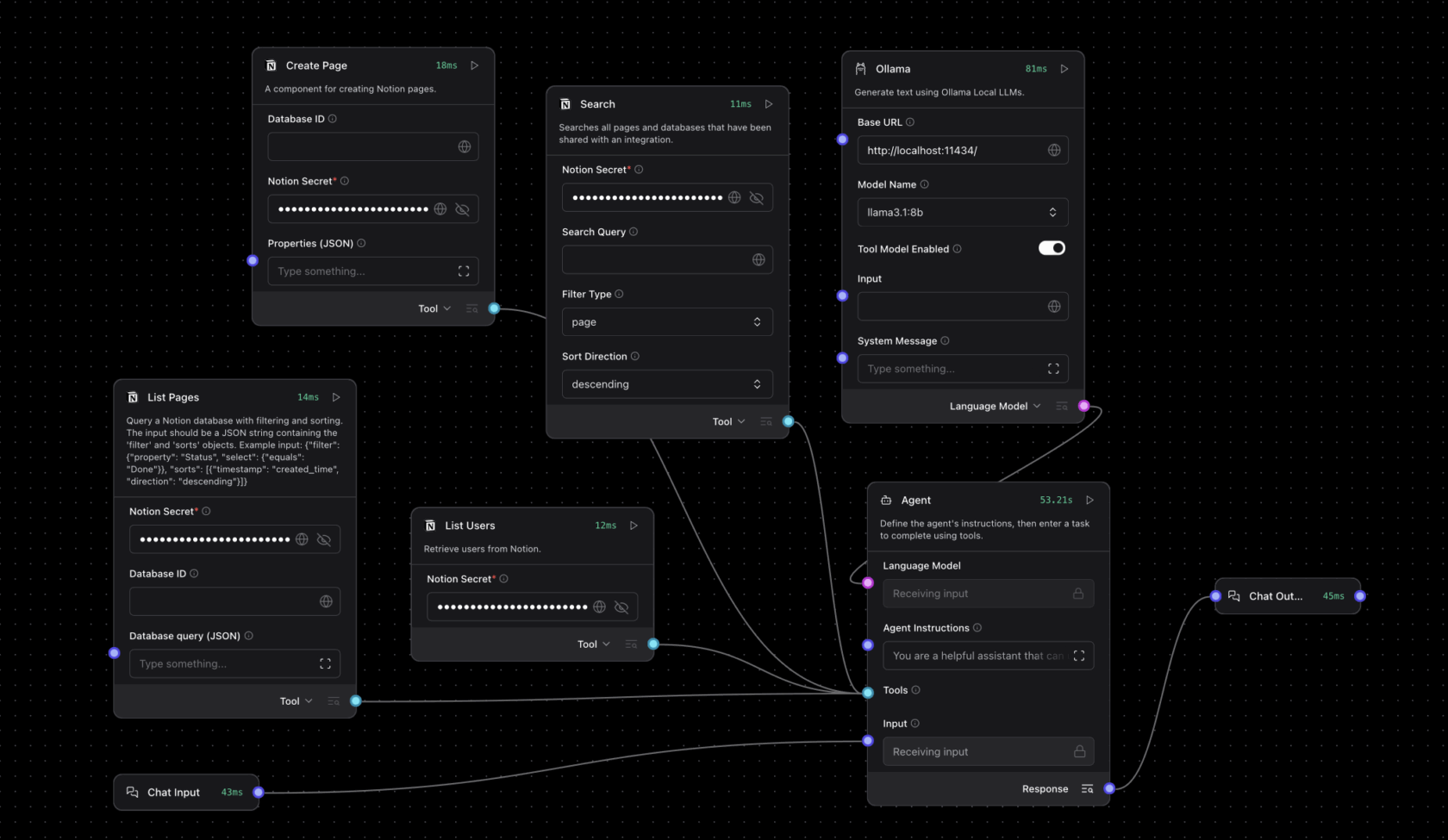

Langflow offers an easy-to-use, canvas-style interface where the components of generative AI workflows, such as large language models (LLMs), tools, memory stores and control logic, can be connected through a simple drag-and-drop UI.

This allows complex AI workflows to be built and modified without manual scripting, easing the development of agents capable of decision-making and multistep actions. AI enthusiasts can iterate on and build complex AI workflows without prior coding expertise.

Unlike apps limited to running a single-turn LLM query, Langflow can build advanced AI workflows that behave like intelligent collaborators, capable of analyzing data, retrieving information, executing functions and responding contextually to dynamic inputs.

Langflow can run models from the cloud or locally, with full acceleration on RTX GPUs through Ollama. Running workflows locally provides several key benefits:

- Data privacy: Inputs, files and prompts remain confined to the device.

- Low costs and no API keys: Because access to a cloud application programming interface (API) is not required, there are no token limits, service subscriptions or usage fees associated with running the AI models.

- Performance: RTX GPUs enable low-latency, high-throughput inference, even with long context windows.

- Offline functionality: Local AI workflows remain available without an internet connection.
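This minimal sketch shows what a local model call looks like underneath, which makes the privacy and offline points concrete. It is a plain HTTP request to the Ollama server on the same machine. It assumes Ollama is running at its default address, `http://localhost:11434`, and uses Ollama's `/api/chat` endpoint with streaming disabled. The helper names `build_chat_payload` and `chat` are illustrative and are not part of Langflow or Ollama.

```python
import json
import urllib.request

# Ollama's default local address (assumption: not changed by the user).
# Requests to it never leave the machine.
OLLAMA_URL = "http://localhost:11434"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Build a non-streaming request body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }


def chat(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one chat turn to the local Ollama server and return the reply text."""
    body = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    # Non-streaming /api/chat replies carry the answer under message.content.
    return data["message"]["content"]
```

For example, `chat("llama3.1:8b", "Summarize this note in one sentence.")` returns the reply text, assuming the model has already been downloaded with `ollama pull llama3.1:8b`. No API key is sent, because a default local Ollama server does not require one.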

Creating Local Agents With Langflow and Ollama

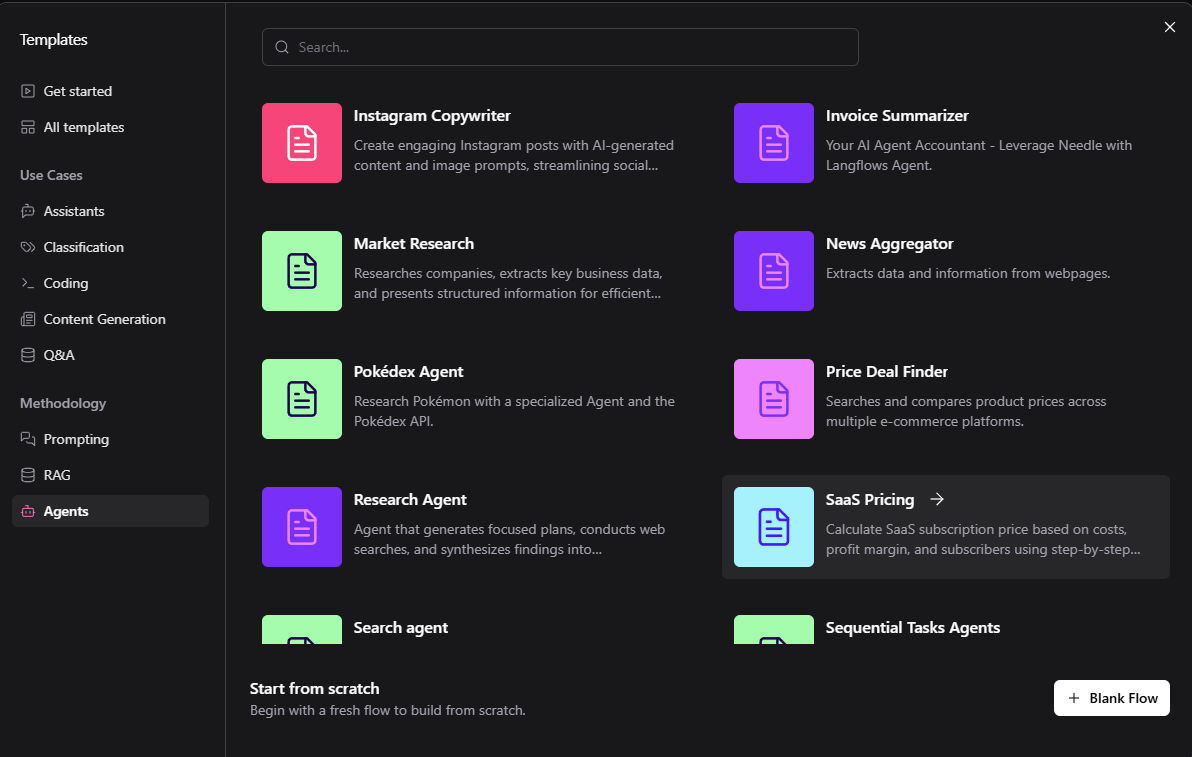

Getting started with Ollama within Langflow is simple. Built-in starter templates are available for use cases ranging from travel agents to shopping assistants. The default templates typically run in the cloud for testing, but they can be customized to run locally on RTX GPUs with Langflow.

To build a local workflow:

- Install the Langflow desktop app for Windows.

- Install Ollama, then run it and launch the preferred model (Llama 3.1 8B or Qwen3 4B is recommended for a first workflow).

- Run Langflow and select a starter template.

- Replace the cloud endpoints with the local Ollama runtime. For agentic workflows, set the agent's language model to Custom, drag an Ollama node onto the canvas and connect the Language Model output of the Ollama node to the agent node's custom model input.
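Before swapping the cloud endpoint for the Ollama node, it can help to confirm that Ollama is running and that the chosen model has been downloaded. This sketch assumes Ollama's default address and its `/api/tags` endpoint, which lists locally available models. The helper functions are hypothetical names used for illustration. The tags `llama3.1:8b` and `qwen3:4b` are assumed to be the Ollama library names for the two recommended models.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address


def parse_model_names(tags_response: dict) -> list:
    """Extract model names from a decoded /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]


def is_model_available(names: list, wanted: str) -> bool:
    """Match 'qwen3:4b' exactly; a bare name like 'mistral' also matches 'mistral:latest'."""
    candidates = {wanted} if ":" in wanted else {wanted, f"{wanted}:latest"}
    return any(name in candidates for name in names)


def list_local_models(base_url: str = OLLAMA_URL) -> list:
    """Ask the local Ollama server which models it has downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return parse_model_names(json.load(resp))
```

Calling `is_model_available(list_local_models(), "qwen3:4b")` returns `False` if the model still needs `ollama pull qwen3:4b`. It raises `urllib.error.URLError` if the Ollama server is not running at all.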

Templates can be modified and expanded, for example by adding system instructions, local file search or structured outputs, to meet advanced automation and assistant use cases.
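System instructions and structured outputs correspond to features Ollama exposes directly. Seeing them at the API level is one way to understand what the Langflow nodes configure, although this is not how Langflow implements them. This sketch builds an `/api/chat` request with a system message and passes a JSON schema in Ollama's `format` field, which recent Ollama releases support for structured outputs. It then checks the decoded reply. The `ITINERARY_SCHEMA` fields and helper names are hypothetical.

```python
import json

# Hypothetical schema for one itinerary result; the field names are illustrative only.
ITINERARY_SCHEMA = {
    "type": "object",
    "properties": {
        "city": {"type": "string"},
        "days": {"type": "integer"},
        "activities": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["city", "days", "activities"],
}


def build_structured_payload(model: str, system_prompt: str,
                             user_prompt: str, schema: dict) -> dict:
    """Build an /api/chat body with a system instruction and a JSON-schema output format."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "format": schema,  # constrains the reply to JSON matching this schema
        "stream": False,
    }


def parse_structured_reply(response: dict, required: list) -> dict:
    """Decode the model's JSON reply and make sure the required fields are present."""
    data = json.loads(response["message"]["content"])
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"reply is missing fields: {missing}")
    return data
```

Send the payload with any HTTP client to `http://localhost:11434/api/chat`, then pass the decoded response to `parse_structured_reply`. Checking required fields after decoding is an inexpensive guard in case a smaller local model returns JSON that omits a field.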

Watch this step-by-step walkthrough from the Langflow team:

Get Started

Below are two sample projects to start exploring.

Create a personal travel itinerary agent: Input all travel requirements, including desired restaurant reservations, travelers’ dietary restrictions and more, to automatically find and arrange accommodations, transportation, food and entertainment.

Expand Notion’s capabilities: Notion, an AI workspace application for organizing projects, can be expanded with AI models that automatically enter meeting notes, update the status of projects based on Slack chats or email, and send out project or meeting summaries.

RTX Remix Adds Model Context Protocol, Unlocking Agent Mods

RTX Remix, an open-source platform that allows modders to enhance materials with generative AI tools and create stunning RTX remasters featuring full ray tracing and neural rendering technologies, is adding support for Model Context Protocol (MCP) with Langflow.

Langflow nodes with MCP give users a direct interface for working with RTX Remix. This enables modders to build modding assistants that can intelligently interact with Remix documentation and mod functions.

To help modders get started, NVIDIA’s Langflow Remix template includes:

- A retrieval-augmented generation module with RTX Remix documentation.

- Real-time access to Remix documentation for Q&A-style support.

- An action node via MCP that supports direct function execution inside RTX Remix, including asset replacement, metadata updates and automated mod interactions.
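MCP messages are JSON-RPC 2.0 requests, and a tool invocation uses the `tools/call` method with a tool `name` and an `arguments` object. This sketch builds such a request and extracts the text content from a result. It leaves out the transport (stdio or HTTP). The tool name in the usage note is hypothetical; the tools the RTX Remix MCP server actually exposes are not shown here.

```python
import itertools

# JSON-RPC 2.0 requests (unlike notifications) carry an id; use a simple counter.
_request_ids = itertools.count(1)


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def extract_text(response: dict) -> str:
    """Return the text parts of a tools/call result, raising on protocol or tool errors."""
    if "error" in response:  # JSON-RPC level failure, e.g. an unknown method
        raise RuntimeError(response["error"].get("message", "JSON-RPC error"))
    result = response["result"]
    texts = [part["text"] for part in result.get("content", [])
             if part.get("type") == "text"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("; ".join(texts) or "tool reported an error")
    return "\n".join(texts)
```

For instance, `make_tool_call("replace_texture", {"asset_id": "example"})` produces a request for a hypothetical `replace_texture` tool. An MCP client would send that request to the server and pass the reply to `extract_text`.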

Modding assistant agents built with this template can determine whether a query is informational or action-oriented. Based on context, agents dynamically respond with guidance or take the requested action. For example, a user might prompt the agent: “Swap this low-resolution texture with a higher-resolution version.” In response, the agent would check the asset’s metadata, locate an appropriate replacement and update the project using MCP functions, without requiring manual interaction.
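The choice between an informational answer and an action can be pictured as a routing step in front of two branches. This toy sketch uses a keyword check purely for illustration. An agent built from the template would more likely let the LLM make this decision from context. The verb list and the handler functions are hypothetical.

```python
import re
from typing import Callable

# Hypothetical verbs that signal the user wants something changed in the project.
ACTION_VERBS = {"swap", "replace", "update", "rename", "delete", "set"}


def classify(query: str) -> str:
    """Label a query 'action' if it contains an action verb, else 'informational'."""
    words = re.findall(r"[a-z]+", query.lower())
    return "action" if any(word in ACTION_VERBS for word in words) else "informational"


def route(query: str,
          answer: Callable[[str], str],
          act: Callable[[str], str]) -> str:
    """Send informational queries to a documentation handler and actions to an MCP handler."""
    handler = act if classify(query) == "action" else answer
    return handler(query)
```

Keyword matching is brittle. It would misroute “What does replace do?” as an action. The sketch only shows where the branch sits in the workflow.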

Documentation and setup instructions for the Remix template are available in the RTX Remix developer guide.

Control RTX AI PCs With Project G-Assist in Langflow

NVIDIA Project G-Assist is an experimental, on-device AI assistant that runs locally on GeForce RTX PCs. It enables users to query system information (e.g. PC specs, CPU/GPU temperatures, utilization), adjust system settings and more, all through simple natural language prompts.

With the G-Assist component in Langflow, these capabilities can be built into custom agentic workflows. Users can prompt G-Assist to “get GPU temperatures” or “tune fan speeds,” and its responses and actions will flow through their chain of components.

Beyond diagnostics and system control, G-Assist is extensible via its plug-in architecture, which allows users to add new commands tailored to their workflows. Community-built plug-ins can also be invoked directly from Langflow workflows.

To get started with the G-Assist component in Langflow, read the developer documentation.

Langflow can also serve as a development tool for NVIDIA NeMo microservices, a modular platform for building and deploying AI workflows across on-premises or cloud Kubernetes environments.

With integrated support for Ollama and MCP, Langflow offers a practical no-code platform for building real-time AI workflows and agents that run fully offline and on device, all accelerated by NVIDIA GeForce RTX and RTX PRO GPUs.

Each week, the RTX AI Garage blog series features community-driven AI innovations and content for those looking to learn more about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, productivity apps and more on AI PCs and workstations.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter. Join NVIDIA’s Discord server to connect with community developers and AI enthusiasts for discussions on what’s possible with RTX AI.

Follow NVIDIA Workstation on LinkedIn and X.

See notice regarding software product information.