Coaching a language mannequin is memory-intensive, not solely as a result of the mannequin itself is giant but in addition as a result of the lengthy sequences within the coaching knowledge batches. Coaching a mannequin with restricted reminiscence is difficult. On this article, you’ll be taught strategies that allow mannequin coaching in memory-constrained environments. Specifically, you’ll study:

- Low-precision floating-point numbers and mixed-precision coaching

- Utilizing gradient checkpointing

Let’s get began!

Coaching a Mannequin with Restricted Reminiscence utilizing Combined Precision and Gradient Checkpointing

Picture by Meduana. Some rights reserved.

Overview

This text is split into three components; they’re:

- Floating-point Numbers

- Computerized Combined Precision Coaching

- Gradient Checkpointing

Let’s get began!

Floating-Level Numbers

The default knowledge kind in PyTorch is the IEEE 754 32-bit floating-point format, also referred to as single precision. It isn’t the one floating-point kind you should utilize. For instance, most CPUs assist 64-bit double-precision floating-point, and GPUs typically assist half-precision floating-point as nicely. The desk beneath lists some floating-point sorts:

| Information Kind | PyTorch Kind | Whole Bits | Signal Bit | Exponent Bits | Mantissa Bits | Min Worth | Max Worth | eps |

|---|---|---|---|---|---|---|---|---|

| IEEE 754 double precision | torch.float64 |

64 | 1 | 11 | 52 | -1.79769e+308 | 1.79769e+308 | 2.22045e-16 |

| IEEE 754 single precision | torch.float32 |

32 | 1 | 8 | 23 | -3.40282e+38 | 3.40282e+38 | 1.19209e-07 |

| IEEE 754 half precision | torch.float16 |

16 | 1 | 5 | 10 | -65504 | 65504 | 0.000976562 |

| bf16 | torch.bfloat16 |

16 | 1 | 8 | 7 | -3.38953e+38 | 3.38953e+38 | 0.0078125 |

| fp8 (e4m3) | torch.float8_e4m3fn |

8 | 1 | 4 | 3 | -448 | 448 | 0.125 |

| fp8 (e5m2) | torch.float8_e5m2 |

8 | 1 | 5 | 2 | -57344 | 57344 | 0.25 |

| fp8 (e8m0) | torch.float8_e8m0fnu |

8 | 1 | 8 | 0 | 1.70141e+38 | 5.87747e-39 | 1.0 |

| fp6 (e3m2) | 6 | 1 | 3 | 2 | -28 | 28 | 0.25 | |

| fp6 (e2m3) | 6 | 1 | 2 | 3 | -7.5 | 7.5 | 0.125 | |

| fp4 (e2m1) | 4 | 1 | 2 | 1 | -6 | 6 |

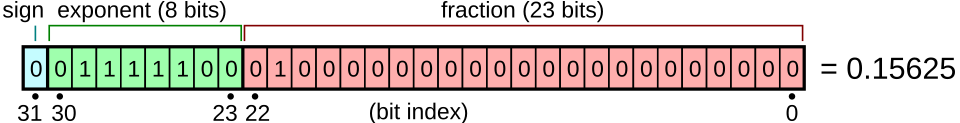

Floating-point numbers are binary representations of actual numbers. Every consists of an indication bit, a number of bits for the exponent, and a number of other bits for the mantissa. They’re laid out as proven within the determine beneath. When sorted by their binary illustration, floating-point numbers retain their order by real-number worth.

Floating-point quantity illustration. Determine from Wikimedia.

Completely different floating-point sorts have totally different ranges and precisions. Not all sorts are supported by all {hardware}. For instance, fp4 is just supported in Nvidia’s Blackwell structure. PyTorch helps only some knowledge sorts. You possibly can run the next code to print details about numerous floating-point sorts:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

import torch from tabulate import tabulate

# float sorts: float_types = [ torch.float64, torch.float32, torch.float16, torch.bfloat16, torch.float8_e4m3fn, torch.float8_e5m2, torch.float8_e8m0fnu, ]

# gather finfo for every kind desk = [] for dtype in float_types: information = torch.finfo(dtype) attempt: typename = information.dtype besides: typename = str(dtype) desk.append([typename, info.max, info.min, info.smallest_normal, info.eps])

headers = [‘data type’, ‘max’, ‘min’, ‘smallest normal’, ‘eps’] print(tabulate(desk, headers=headers)) |

Take note of the min and max values for every kind, in addition to the eps worth. The min and max values point out the vary a kind can assist (the dynamic vary). For those who prepare a mannequin with such a kind, however the mannequin weights exceed this vary, you’re going to get overflow or underflow, normally inflicting the mannequin to output NaN or Inf. The eps worth is the smallest optimistic quantity such that the sort can differentiate between 1+eps and 1. This can be a metric for precision. In case your mannequin’s gradient updates are smaller than eps, you’ll doubtless observe the vanishing gradient downside.

Due to this fact, float32 is an efficient default selection for deep studying: it has a large dynamic vary and excessive precision. Nevertheless, every float32 quantity requires 4 bytes of reminiscence. As a compromise, you should utilize float16 to save lots of reminiscence, however you’re prone to encounter overflow or underflow points because the dynamic vary is far smaller.

The Google Mind group recognized this downside and proposed bfloat16, a 16-bit floating-point format with the identical dynamic vary as float32. As a trade-off, the precision is an order of magnitude worse than float16. It seems that dynamic vary is extra necessary than precision for deep studying, making bfloat16 extremely helpful.

If you create a tensor in PyTorch, you may specify the information kind. For instance:

|

x = torch.tensor([1.0, 2.0, 3.0], dtype=torch.float16) print(x) |

There’s a simple option to change the default to a special kind, reminiscent of bfloat16. That is helpful for mannequin coaching. All it’s essential to do is ready the next line earlier than you create any mannequin or optimizer:

|

# set default dtype to bfloat16 torch.set_default_dtype(torch.bfloat16) |

Simply by doing this, you power all of your mannequin weights and gradients to be bfloat16 kind. This protects half of the reminiscence. Within the earlier article, you had been suggested to set the batch dimension to eight to suit a GPU with solely 12GB of VRAM. With bfloat16, it’s best to be capable of set the batch dimension to 16.

Observe that making an attempt to make use of 8-bit float or lower-precision sorts might not work. It is because you want {hardware} assist and PyTorch to carry out the corresponding mathematical operations. You possibly can attempt the next code (requires a CUDA machine) and discover that you will want further effort to function on 8-bit float:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

dtype = torch.float8_e4m3fn

# Outline a tensor with float8 will see # NotImplementedError: “normal_kernel_cuda” not carried out for ‘Float8_e4m3fn’ x = torch.randn(16, 16, dtype=dtype, machine=“cuda”)

# Create in float32 and convert to float8 works x = torch.randn(16, 16, machine=“cuda”).to(dtype)

# However matmul just isn’t supported. You will note # NotImplementedError: “addmm_cuda” not carried out for ‘Float8_e4m3fn’ y = x @ x.T

# The right option to run matrix multiplication on 8-bit float y = torch._scaled_mm(x, x.T, out_dtype=dtype, scale_a=torch.tensor(1.0, machine=“cuda”), scale_b=torch.tensor(1.0, machine=“cuda”)) print(y) |

Computerized Combined Precision Coaching

Coaching a mannequin with float16 might encounter points as a result of not all operations needs to be carried out at decrease precision. For instance, matrix multiplication is powerful in decrease precision, however discount operations, pooling, and a few activation capabilities require float32.

You possibly can set the information kind manually for every element of your mannequin, however that is tedious since you should convert knowledge sorts between elements. A greater answer is to make use of computerized combined precision coaching in PyTorch.

PyTorch has a sub-library torch.amp that may robotically forged the information kind primarily based on the operation. Not all operations are carried out in the identical floating-point kind. If the operation is thought to be strong at decrease precision, this library will forged the tensors to that precision earlier than operating the operation. Therefore the title “combined precision”. Utilizing decrease precision might not solely save reminiscence but in addition velocity up coaching. Some GPUs can run float16 operations at twice the velocity of float32.

If you prepare a mannequin with torch.amp, all it’s essential to do is run your ahead go below the context of torch.amp.autocast(). Sometimes, additionally, you will use a GradScaler to deal with gradient scaling. That is vital as a result of below low precision, you might encounter vanishing gradients as a result of restricted precision of your floating-point kind. The GradScaler scales the gradient earlier than the backward go to forestall lack of gradient movement. Through the backward go, it’s best to scale the gradient again for correct updates. This course of could be cumbersome as a result of it’s essential to decide the right scale issue, which the GradScaler handles for you.

In comparison with the coaching loop from the earlier article, beneath is the way you usually use torch.amp to coach a mannequin:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 |

...

# Verify if combined precision coaching is supported assert torch.amp.autocast_mode.is_autocast_available(“cuda”)

# Creates a GradScaler earlier than the coaching loop scaler = torch.amp.GradScaler(“cuda”, enabled=True)

# begin coaching for epoch in vary(begin_epoch, epochs): pbar = tqdm.tqdm(dataloader, desc=f“Epoch {epoch+1}/{epochs}”) for batch_id, batch in enumerate(pbar): # get batched knowledge input_ids, target_ids = batch # create consideration masks: causal masks + padding masks attn_mask = create_causal_mask(input_ids.form[1], machine) + create_padding_mask(input_ids, PAD_TOKEN_ID, machine) # with autocasting to bfloat16, run the ahead go with torch.autocast(device_type=“cuda”, dtype=torch.bfloat16): logits = mannequin(input_ids, attn_mask) loss = loss_fn(logits.view(–1, logits.dimension(–1)), target_ids.view(–1)) # backward with loss, scaled by the GradScaler optimizer.zero_grad() scaler.scale(loss).backward() # step the optimizer and test if the dimensions has been up to date scaler.step(optimizer) old_scale = scaler.get_scale() scaler.replace() if scaler.get_scale() old_scale: scheduler.step() pbar.set_postfix(loss=loss.merchandise()) pbar.replace(1) pbar.shut() |

Utilizing AMP autocasting is easy: preserve the mannequin’s default precision at float32, then wrap the ahead go and loss computation with torch.autocast(). Below this context, all supported operations will run within the specified knowledge kind.

After getting the loss, let the GradScaler deal with the backward go. It can scale up the loss and replace the mannequin’s gradients. Nevertheless, this may occasionally trigger points if the scaling is just too giant, leading to NaN or Inf gradients. Due to this fact, use scaler.step(optimizer) to step the optimizer, which verifies the gradients earlier than executing the optimizer step. If GradScaler decides to not step the optimizer, it’ll scale back the dimensions issue when replace() is known as. Verify whether or not the dimensions has been up to date to find out should you ought to step the scheduler.

For the reason that backward go makes use of scaled loss, should you use gradient clipping, it’s best to unscale the gradients earlier than clipping. Right here’s the best way to do it:

|

... # backward with loss, scaled by the GradScaler optimizer.zero_grad() scaler.scale(loss).backward() # unscaled the gradients and apply gradient clipping scaler.unscale_(optimizer) torch.nn.utils.clip_grad_norm_(mannequin.parameters(), 1.0) # step the optimizer and test if the dimensions has been up to date scaler.step(optimizer) old_scale = scaler.get_scale() scaler.replace() if scaler.get_scale() old_scale: scheduler.step() |

Usually, you don’t have to name scaler.unscale_() manually because it’s a part of the scaler.step(optimizer) name. Nevertheless, you have to achieve this when making use of gradient clipping in order that the clipping operate can observe the precise gradients.

Autocasting is computerized, however the GradScaler maintains a state to trace the dimensions issue. Due to this fact, whenever you checkpoint your mannequin, you also needs to save the scaler.state_dict(), simply as you’d save the optimizer state:

|

... # Loading checkpoint checkpoint = torch.load(“training_checkpoint.pth”) mannequin.load_state_dict(checkpoint[“model”]) optimizer.load_state_dict(checkpoint[“optimizer”]) scheduler.load_state_dict(checkpoint[“scheduler”]) scaler.load_state_dict(checkpoint[“scaler”])

# Saving checkpoint torch.save({ “mannequin”: mannequin.state_dict(), “optimizer”: optimizer.state_dict(), “scheduler”: scheduler.state_dict(), “scaler”: scaler.state_dict(), }, f“training_checkpoint.pth”) |

Gradient Checkpointing

If you prepare a mannequin with half precision, you utilize half the reminiscence in comparison with 32-bit float. With mixed-precision coaching, you might use barely extra reminiscence as a result of not all operations run at decrease precision.

For those who nonetheless encounter reminiscence points, one other method trades time for reminiscence: gradient checkpointing. Recall that in deep studying, for a operate $y=f(mathbb{u})$ and $mathbb{u}=g(mathbb{x}))$, then

$$

frac{partial y}{partial mathbb{x}} = large(frac{partial mathbb{u}}{partial mathbb{x}}large)^high frac{partial y}{partial mathbb{u}}

$$

the place $y$ is a scalar (normally the loss metric), and $mathbb{u}$ and $mathbb{x}$ are vectors. The time period $frac{partial mathbb{u}}{partial mathbb{x}}$ is the Jacobian matrix of $mathbb{u}$ with respect to $mathbb{x}$.

The gradient $frac{partial y}{partial mathbb{x}}$ is required to replace $mathbb{x}$ however depends upon $frac{partial y}{partial mathbb{u}}$. Usually, whenever you run the ahead go, all intermediate outcomes reminiscent of $mathbb{u}$ are saved in reminiscence in order that whenever you run the backward go, you may readily compute the gradient $frac{partial y}{partial mathbb{u}}$. Nevertheless, this requires substantial reminiscence for deep networks.

Gradient checkpointing discards some intermediate outcomes. So long as you recognize $mathbb{u}=g(mathbb{x})$, you may recompute $mathbb{u}$ from $mathbb{x}$ throughout the backward go. This fashion, you don’t have to retailer $mathbb{u}$ in reminiscence, however you have to compute $mathbb{u}$ twice: as soon as for the ahead go and as soon as for the backward go.

You possibly can resolve which intermediate outcomes to discard. Making use of gradient checkpointing to each two operations nonetheless requires storing many intermediate outcomes. Making use of it to bigger blocks saves extra reminiscence.

Referring to the mannequin from the earlier article, you may wrap each transformer block with gradient checkpointing:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

... class LlamaModel(nn.Module): def __init__(self, config: LlamaConfig) -> None: tremendous().__init__() self.rotary_emb = RotaryPositionEncoding( config.hidden_size // config.num_attention_heads, config.max_position_embeddings, )

self.embed_tokens = nn.Embedding(config.vocab_size, config.hidden_size) self.layers = nn.ModuleList([LlamaDecoderLayer(config) for _ in range(config.num_hidden_layers)]) self.norm = nn.RMSNorm(config.hidden_size, eps=1e–5)

def ahead(self, input_ids: Tensor, attn_mask: Tensor) -> Tensor: # Convert enter token IDs to embeddings hidden_states = self.embed_tokens(input_ids) # Course of by way of all transformer layers, then the ultimate norm layer for layer in self.layers: # Beforehand: # hidden_states = layer(hidden_states, rope=self.rotary_emb, attn_mask=attn_mask) hidden_states = torch.utils.checkpoint.checkpoint(layer, hidden_states, self.rotary_emb, attn_mask) hidden_states = self.norm(hidden_states) # Return the ultimate hidden states return hidden_states |

Just one line of code wants to vary: within the for-loop below the ahead() operate, as an alternative of calling the transformer block straight, use torch.utils.checkpoint.checkpoint(). This runs the ahead go with gradient checkpointing, discarding all intermediate outcomes and retaining solely the block’s enter and output. Through the backward go, the intermediate outcomes are quickly recomputed utilizing the enter.

Additional readings

Under are some assets that you could be discover helpful:

Abstract

On this article, you realized strategies for coaching a language mannequin with restricted reminiscence. Particularly, you realized that:

- A number of varieties of floating-point numbers exist, with some utilizing much less reminiscence than others.

- Combined-precision coaching robotically makes use of lower-precision floating-point numbers with out sacrificing accuracy on important operations.

- Gradient checkpointing trades time for reminiscence throughout coaching.