Character.AI, a popular chatbot platform where users role-play with different personas, will no longer permit under-18 account holders to have open-ended conversations with chatbots, the company announced Wednesday. It will also begin relying on age assurance methods to ensure that minors aren't able to open adult accounts.

The dramatic shift comes just six weeks after Character.AI was sued again in federal court by the Social Media Victims Law Center, which is representing multiple parents of teens who died by suicide or allegedly experienced severe harm, including sexual abuse. The parents claim their children's use of the platform was responsible for the harm. In October 2024, Megan Garcia filed a wrongful death suit seeking to hold the company responsible for the suicide of her son, arguing that its product is dangerously defective. She is represented by the Social Media Victims Law Center and the Tech Justice Law Project.

Online safety advocates recently declared Character.AI unsafe for teens after they tested the platform this spring and logged hundreds of harmful interactions, including violence and sexual exploitation.

As it faced legal pressure over the past year, Character.AI implemented parental controls and content filters in an effort to improve safety for teens.

In an interview with Mashable, Character.AI CEO Karandeep Anand described the new policy as "bold" and denied that curbing open-ended chatbot conversations with teens was a response to specific safety concerns.

Instead, Anand framed the decision as "the right thing to do" in light of broader unanswered questions about the long-term effects of chatbot engagement on teens. He referenced OpenAI's recent acknowledgment, in the wake of a teen user's suicide, that lengthy conversations can become unpredictable.

Anand cast Character.AI's new policy as standard-setting: "Hopefully it sets everyone up on a path where AI can continue being safe for everyone."

He added that the company's decision will not change, regardless of user backlash.

Matthew P. Bergman, Garcia's co-counsel in her wrongful death lawsuit against Character.AI, told Mashable in a statement that the company's announcement marked a "significant step toward creating a safer online environment for children."

He credited Garcia and other parents for coming forward to hold the company accountable. Though he commended Character.AI for shutting down teen chats, Bergman said the decision would not affect ongoing litigation against the company.

Meetali Jain, who also represents Garcia, said in a statement that she welcomed the new policy as a "good first step" toward ensuring that Character.AI is safer. Yet she added that the pivot reflected a "classic move in tech industry's playbook: move fast, launch a product globally, break minds, and then make minimal product changes after harming scores of young people."

Jain noted that Character.AI has yet to address the "possible psychological impact of suddenly disabling access to young users, given the emotional dependencies that have been created."

What will Character.AI look like for teens now?

In a blog post announcing the new policy, Character.AI apologized to its teen users.

"We don't take this step of removing open-ended Character chat lightly, but we do think that it's the right thing to do given the questions that have been raised about how teens do, and should, interact with this new technology," the blog post said.

Currently, users ages 13 to 17 can message with chatbots on the platform. That feature will cease to exist no later than November 25. Until then, accounts registered to minors will face time limits, starting at two hours per day. That limit will decrease as the transition away from open-ended chats gets closer.

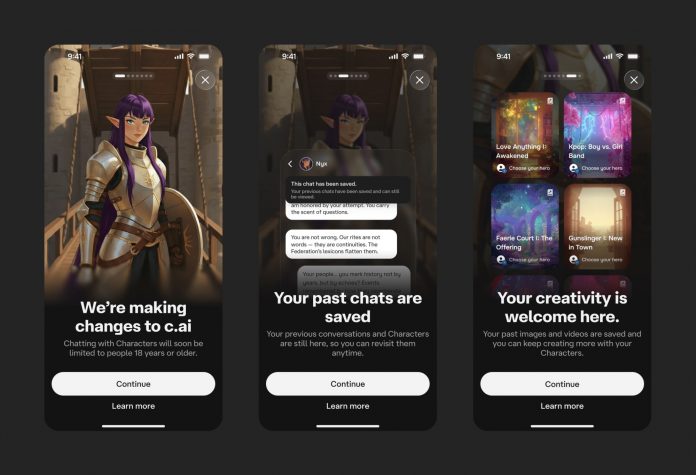

Teen users on Character.AI will see these notifications about impending changes to the platform.

Credit: Courtesy of Character.AI

Even though open-ended chats will disappear, teens' chat histories with individual chatbots will remain intact. Anand said users can draw on that material to generate short audio and video stories with their favorite chatbots. In the next few months, Character.AI will also explore new features like gaming. Anand believes an emphasis on "AI entertainment" without open-ended chat will satisfy teens' creative interest in the platform.

"They're coming to role-play, and they're coming to get entertained," Anand said.

He insisted that existing chat histories containing sensitive or prohibited content, such as violence or sex, that filters may not have previously detected would not find their way into the new audio or video stories.

A Character.AI spokesperson told Mashable that the company's trust and safety team reviewed the findings of a report co-published in September by the Heat Initiative that documented harmful chatbot exchanges with test accounts registered to minors. The team concluded that some conversations violated the platform's content guidelines while others did not. It also tried to replicate the report's findings.

"Based on these results, we refined some of our classifiers, in line with our goal for users to have a safe and engaging experience on our platform," the spokesperson said.

Sarah Gardner, CEO of the Heat Initiative, told Mashable that the nonprofit would be paying close attention to the implementation of Character.AI's new policies to ensure they aren't "just another round of child safety theater."

While she described the measures as a "positive sign," she argued that the announcement "is also an admission that Character AI's products have been inherently unsafe for young users from the beginning, and that their previous safety rollouts have been ineffective in protecting children from harm."

Character.AI will begin implementing age assurance immediately. The system will take a month to go into effect and will have multiple layers. Anand said the company is building its own assurance models in-house but that it will partner with a third-party company on the technology.

It will also use relevant data and signals, such as whether a user has a verified over-18 account on another platform, to accurately detect the age of new and existing users. Users who want to challenge Character.AI's age determination will have the opportunity to provide verification through a third party, which will handle sensitive documents and data, including state-issued identification.

Finally, as part of the new policies, Character.AI is establishing and funding an independent nonprofit called the AI Safety Lab. The lab will focus on "novel safety techniques."

"[W]e want to bring in the industry experts and other partners to keep making sure that AI continues to remain safe, especially in the realm of AI entertainment," Anand said.

UPDATE: Oct. 29, 2025, 10:12 a.m. PDT: This story has been updated to include comments from legal counsel and safety experts on Character.AI's new policies.