Editor’s note: This post is part of Think SMART, a series focused on how leading AI service providers, developers and enterprises can boost their inference performance and return on investment with the latest advancements from NVIDIA’s full-stack inference platform.

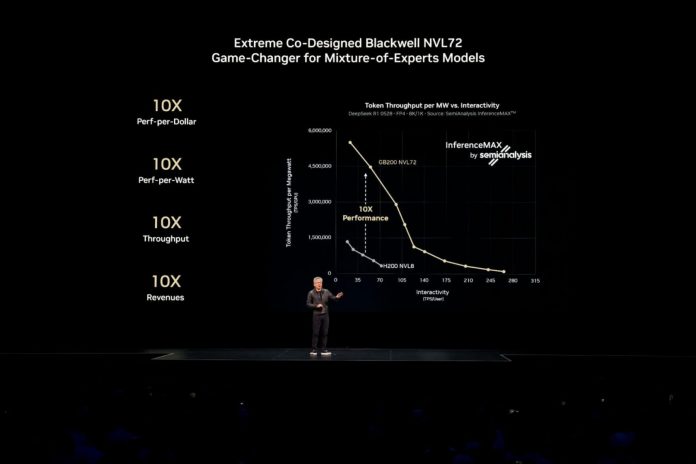

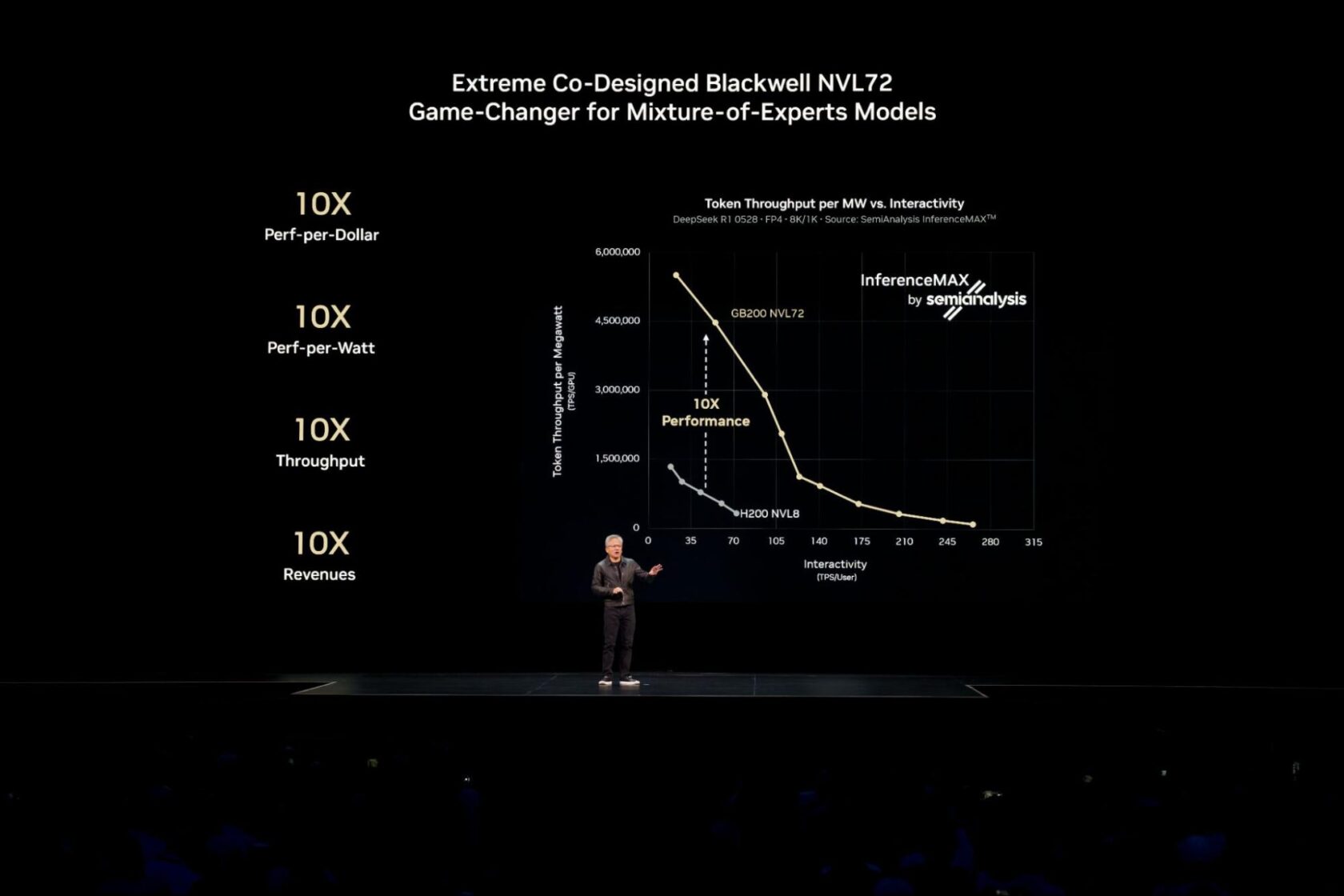

NVIDIA Blackwell delivers the highest performance and efficiency, and the lowest total cost of ownership, across every tested model and use case in the recent independent SemiAnalysis InferenceMAX v1 benchmark.

Achieving this industry-leading performance for today’s most complex AI models, such as large-scale mixture-of-experts (MoE) models, requires distributing (or disaggregating) inference across multiple servers (nodes) to serve millions of concurrent users and deliver faster responses.

The NVIDIA Dynamo software platform unlocks these powerful multi-node capabilities for production, enabling enterprises to achieve the same benchmark-winning performance and efficiency across their existing cloud environments. Read on to learn how the shift to multi-node inference is driving performance, and how cloud platforms are putting this technology to work.

Tapping Disaggregated Inference for Optimized Performance

For AI models that fit on a single GPU or server, developers often run many identical replicas of the model in parallel across multiple nodes to deliver high throughput. In a recent paper, Russ Fellows, principal analyst at Signal65, showed that this approach achieved an industry-first record aggregate throughput of 1.1 million tokens per second with 72 NVIDIA Blackwell Ultra GPUs.
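For a rough sense of scale, the aggregate figure can be divided evenly across the GPUs. This assumes an even split of work across all 72 GPUs, so the result is a back-of-the-envelope average rather than a measured per-GPU number from the paper:

$$\frac{1.1 \times 10^{6}\ \text{tokens/s}}{72\ \text{GPUs}} \approx 1.53 \times 10^{4}\ \text{tokens/s per GPU}$$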

When scaling AI models to serve many concurrent users in real time, or when handling demanding workloads with long input sequences, a technique called disaggregated serving unlocks further performance and efficiency gains.

Serving AI models involves two phases: processing the input prompt (prefill) and generating the output (decode). Traditionally, both phases run on the same GPUs, which can create inefficiencies and resource bottlenecks.

Disaggregated serving solves this by distributing these phases to separate, independently optimized GPUs. Each part of the workload then runs with the optimization techniques best suited to it, maximizing overall performance. For today’s large AI reasoning and MoE models, such as DeepSeek-R1, disaggregated serving is essential.
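To make the prefill/decode split concrete, here is a minimal Python sketch of the idea, assuming a toy model in which a `KVCache` object is the only state handed from one phase to the other. The names `KVCache`, `prefill`, `decode` and `DisaggregatedRouter`, and the GPU labels, are hypothetical and are not part of NVIDIA Dynamo’s API; a real system also transfers the cache between GPUs, which is only marked by a comment here.

```python
import itertools
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class KVCache:
    """Stand-in for the key/value attention cache that prefill produces."""
    prompt_tokens: List[int]


def prefill(prompt_tokens: Sequence[int]) -> KVCache:
    """Phase 1: process the entire input prompt in a single pass."""
    if not prompt_tokens:
        raise ValueError("prompt must contain at least one token")
    return KVCache(prompt_tokens=list(prompt_tokens))


def decode(cache: KVCache, max_new_tokens: int) -> List[int]:
    """Phase 2: generate output tokens one step at a time from the cache.

    The "next token" rule below is a toy placeholder, not a real model.
    """
    last = cache.prompt_tokens[-1]
    output = []
    for _ in range(max_new_tokens):
        last += 1
        output.append(last)
    return output


class DisaggregatedRouter:
    """Sends each request's prefill and decode phases to separate GPU pools."""

    def __init__(self, prefill_gpus: Sequence[str], decode_gpus: Sequence[str]):
        # The two pools are sized independently and picked round-robin.
        self._prefill_gpus = itertools.cycle(prefill_gpus)
        self._decode_gpus = itertools.cycle(decode_gpus)

    def serve(self, prompt_tokens: Sequence[int],
              max_new_tokens: int) -> Tuple[str, str, List[int]]:
        prefill_gpu = next(self._prefill_gpus)
        decode_gpu = next(self._decode_gpus)
        cache = prefill(prompt_tokens)  # would run on prefill_gpu
        # A real system would move the KV cache from prefill_gpu to decode_gpu here.
        tokens = decode(cache, max_new_tokens)  # would run on decode_gpu
        return prefill_gpu, decode_gpu, tokens
```

For instance, `DisaggregatedRouter(["prefill-0"], ["decode-0", "decode-1"])` sends every prompt to the single prefill GPU while alternating decode work across two GPUs, so each pool can be sized for its own phase.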

NVIDIA Dynamo brings features like disaggregated serving to production scale across GPU clusters.

It’s already delivering value.

Baseten, for example, used NVIDIA Dynamo to speed up inference serving for long-context code generation by 2x and increase throughput by 1.6x, all without incremental hardware costs. Software-driven performance boosts like these enable AI providers to significantly reduce the cost of manufacturing intelligence.
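The link between throughput and cost can be made explicit with a worked ratio. Assume a fixed hardware cost per hour $C$ (the text reports no incremental hardware costs) and a throughput $T$ in tokens per hour, so the cost per token is $C/T$. A 1.6x throughput gain on the same hardware then gives:

$$\frac{C / (1.6\,T)}{C / T} = \frac{1}{1.6} = 0.625$$

Under these assumptions, that is roughly 37.5% lower cost per token; the post does not report Baseten’s actual cost figures.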

Scaling Disaggregated Inference in the Cloud

Much like it did for large-scale AI training, Kubernetes, the industry standard for containerized application management, is well positioned to scale disaggregated serving across dozens or even hundreds of nodes for enterprise-scale AI deployments.

With NVIDIA Dynamo now integrated into managed Kubernetes services from all major cloud providers, customers can scale multi-node inference across NVIDIA Blackwell systems, including GB200 and GB300 NVL72, with the performance, flexibility and reliability that enterprise AI deployments demand.

- Amazon Web Services is accelerating generative AI inference for its customers with NVIDIA Dynamo and its integration with Amazon EKS.

- Google Cloud is providing a Dynamo recipe to optimize large language model (LLM) inference at enterprise scale on its AI Hypercomputer.

- Microsoft Azure is enabling multi-node LLM inference with NVIDIA Dynamo and ND GB200-v6 GPUs on Azure Kubernetes Service.

- Oracle Cloud Infrastructure (OCI) is enabling multi-node LLM inferencing with OCI Superclusters and NVIDIA Dynamo.

The push toward large-scale, multi-node inference extends beyond hyperscalers.

Nebius, for example, is designing its cloud to serve inference workloads at scale, built on NVIDIA accelerated computing infrastructure and working with NVIDIA Dynamo as an ecosystem partner.

Simplifying Inference on Kubernetes With NVIDIA Grove in NVIDIA Dynamo

Disaggregated AI inference requires coordinating a team of specialized components (prefill, decode, routing and more), each with different needs. The challenge for Kubernetes is no longer running more parallel copies of a model, but orchestrating these distinct components as one cohesive, high-performance system.

NVIDIA Grove, an application programming interface now available within NVIDIA Dynamo, lets users provide a single, high-level specification that describes their entire inference system.

For example, in that single specification, a user could simply declare their requirements: “I need three GPU nodes for prefill and six GPU nodes for decode, and I require all nodes for a single model replica to be placed on the same high-speed interconnect for the quickest possible response.”
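The example requirement above can be captured as a small declarative data structure. This is a minimal Python sketch of what such a single specification expresses; `RoleSpec`, `ReplicaSpec` and their fields are hypothetical and are not Grove’s actual schema.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RoleSpec:
    """One component of a model replica and how many GPU nodes it needs."""
    name: str
    gpu_nodes: int


@dataclass(frozen=True)
class ReplicaSpec:
    """Hypothetical single, high-level description of one model replica."""
    roles: Tuple[RoleSpec, ...]
    # Require every node of the replica to share one high-speed interconnect.
    same_interconnect: bool = True

    def __post_init__(self) -> None:
        # Reject ambiguous or empty declarations up front.
        names = [role.name for role in self.roles]
        if len(names) != len(set(names)):
            raise ValueError("role names must be unique")
        for role in self.roles:
            if role.gpu_nodes < 1:
                raise ValueError(f"role {role.name!r} needs at least one GPU node")

    def nodes_per_replica(self) -> int:
        """Total GPU nodes that must be placed together for one replica."""
        return sum(role.gpu_nodes for role in self.roles)

    def nodes_for(self, name: str) -> int:
        """GPU nodes requested for a single named role."""
        for role in self.roles:
            if role.name == name:
                return role.gpu_nodes
        raise KeyError(name)


# The requirement from the text: 3 prefill nodes and 6 decode nodes,
# all on the same high-speed interconnect.
spec = ReplicaSpec(roles=(RoleSpec("prefill", 3), RoleSpec("decode", 6)))
```

Keeping the whole replica in one object means the node total (nine here) and the prefill-to-decode ratio travel together, which is what lets an orchestrator treat the replica as one unit.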

From that specification, Grove automatically handles all the intricate coordination: scaling related components together while maintaining correct ratios and dependencies, starting them in the right order and placing them strategically across the cluster for fast, efficient communication. Learn more about how to get started with NVIDIA Grove in this technical deep dive.
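The three coordination tasks listed above (ratio-preserving scaling, ordered startup and interconnect-aware placement) can be illustrated with a small Python sketch. This is not how Grove is implemented; the function names, the assumed dependency graph (a router that waits for prefill and decode workers) and the domain labels standing in for high-speed interconnect domains are all hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List


def scale_replicas(per_replica: Dict[str, int], replicas: int) -> Dict[str, int]:
    """Scale all roles together so their ratio (e.g. 3 prefill : 6 decode) is kept."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return {role: nodes * replicas for role, nodes in per_replica.items()}


def startup_order(depends_on: Dict[str, List[str]]) -> List[str]:
    """Order roles so each one starts only after the roles it depends on."""
    # TopologicalSorter takes {node: predecessors} and raises CycleError on cycles.
    return list(TopologicalSorter(depends_on).static_order())


def place_replica(nodes_needed: int, free_nodes: Dict[str, int]) -> str:
    """Put a whole replica inside a single interconnect domain, or refuse.

    Picks the domain with the fewest free nodes that still fits (best fit),
    which keeps larger domains available for later replicas.
    """
    for domain, free in sorted(free_nodes.items(), key=lambda item: item[1]):
        if free >= nodes_needed:
            return domain
    raise RuntimeError("no single interconnect domain has enough free nodes")
```

For instance, scaling the 3:6 replica to two copies yields 6 prefill and 12 decode nodes, and a nine-node replica is only placed in a domain with at least nine free nodes rather than being split across domains.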

As AI inference becomes increasingly distributed, the combination of Kubernetes and NVIDIA Dynamo with NVIDIA Grove simplifies how developers build and scale intelligent applications.

Try NVIDIA’s AI-at-scale simulation to see how hardware and deployment choices affect performance, efficiency and user experience. To dive deeper into disaggregated serving and learn how Dynamo and NVIDIA GB200 NVL72 systems work together to boost inference performance, read this technical blog.

For monthly updates, sign up for the NVIDIA Think SMART newsletter.